Location

AR



Challenges Entered

What data should you label to get the most value for your money?

Latest submissions

| Status | Submission |
|---|---|
| graded | 179037 |
| graded | 179028 |
| graded | 179027 |

Amazon KDD Cup 2022

Latest submissions

Machine Learning for detection of early onset of Alzheimers

Latest submissions

| Status | Submission |
|---|---|
| graded | 140406 |
| graded | 140404 |
| graded | 140400 |

3D Seismic Image Interpretation by Machine Learning

Latest submissions

| Status | Submission |
|---|---|
| graded | 93328 |
| graded | 90382 |
| graded | 89726 |

Predicting smell of molecular compounds

Latest submissions

5 PROBLEMS 3 WEEKS. CAN YOU SOLVE THEM ALL?

Latest submissions

| Status | Submission |
|---|---|
| graded | 93328 |
| graded | 90382 |
| graded | 89726 |

| Participant | Rating |
|---|---|
| roderick_perez | 0 |
| oduguwa_damilola | 0 |


Data Purchasing Challenge 2022

[Utils] Final Stage model compilation

Almost 4 years ago

Hi all! I have compiled all 5 models from the final stage of the challenge. You can paste the code chunks from the notebook into the corresponding .py files and evaluate all pipelines in one run.

Hope you find it useful for testing your strategies. Please let me know if you have any comments or doubts, and don’t forget to leave a like!

[Discussion] Experiences so far on Phase 2?

Almost 4 years ago

Hi all!

How has your experience been with the second part of the challenge?

On my side, the highlight has been fighting the standard deviation of the results: I have seen F1 score deviations of +0.04 between runs of the same experiment.
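
One way to make this kind of run-to-run spread visible is to repeat the same experiment with several seeds and report the mean and standard deviation instead of a single number. This is a minimal sketch; the `summarize_runs` helper and the scores below are made up for illustration and are not results from the challenge.

```python
import statistics


def summarize_runs(scores):
    """Return (mean, sample standard deviation) for a list of per-run scores."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to estimate a spread")
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical F1 scores from five runs of the same experiment (different seeds).
f1_runs = [0.80, 0.84, 0.82, 0.78, 0.86]
mean_f1, std_f1 = summarize_runs(f1_runs)
print(f"F1 = {mean_f1:.3f} +/- {std_f1:.3f} over {len(f1_runs)} runs")
```

Comparing two strategies is only meaningful when the gap between their means is clearly larger than this spread.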

Some approaches I have taken throughout the challenge have included:

- Variations of heuristic approaches from the Baseline: no significant gains beyond the Baseline’s best score; most of the experiments involved changing the percentage of the budget to be used.

- Heuristic approaches from the Baseline + my own heuristic approaches: most of these took into account the co-occurrence between labels and the weights/counts of each label in the training set.

- Training loss value for each image + image embeddings + PCA: this sounded promising at first, but local results didn’t add much value; I am still doing some further testing.
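
The label counts and co-occurrence mentioned in the list above can be computed directly from a binary multi-label matrix. This is a minimal sketch, assuming the labels are available as an `(n_images, n_classes)` array of 0/1 values; the `label_cooccurrence` helper and the toy matrix are illustrative, not the code used in the challenge.

```python
import numpy as np


def label_cooccurrence(labels):
    """Return (counts, cooc) for a binary (n_images, n_classes) label matrix."""
    y = np.asarray(labels, dtype=np.int64)
    counts = y.sum(axis=0)  # number of images carrying each label
    cooc = y.T @ y          # cooc[i, j] = images carrying both label i and label j
    return counts, cooc


# Toy data: 4 images, 3 hypothetical labels.
toy_labels = [
    [1, 0, 1],
    [1, 1, 0],
    [0, 1, 0],
    [1, 0, 1],
]
counts, cooc = label_cooccurrence(toy_labels)
print(counts)
print(cooc)
```

The diagonal of `cooc` repeats the per-label counts, and the off-diagonal entries show which labels tend to appear together.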

I have also noticed that one could lean heavily on golden seed hunting, but with all the measures being taken, it doesn’t seem to make much sense to follow this path.

Have you guys and gals been focusing mainly on heuristic approaches + active learning, or did you follow some interesting paths on data valuation, reinforcement learning + novel approaches?

Looking forward to hearing about your experiences!

Baseline Released :gift:

Almost 4 years ago

This is great! Going to try plugging in some of my ideas (just when I was about to post my low-tier baseline).

Image Similarity using Embeddings & Co-occurrence

Almost 4 years ago

Hi all!

I have shared a new notebook with a co-occurrence analysis of the labels for all the datasets. I have also made a brief analysis of image similarity using embeddings and PCA.

Have you used any of these ideas? Did you find them useful for your final score?
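
For readers who want to try the embeddings + PCA idea, here is a minimal sketch of projecting embeddings onto their first principal components with an SVD. It assumes the embeddings already exist as an `(n_images, n_features)` array; the random array below is only a stand-in, and `pca_project` is an illustrative helper, not the notebook's code.

```python
import numpy as np


def pca_project(embeddings, n_components=2):
    """Project embeddings onto their first principal components via SVD."""
    x = np.asarray(embeddings, dtype=np.float64)
    centered = x - x.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = (s ** 2) / np.sum(s ** 2)
    return centered @ vt[:n_components].T, explained[:n_components]


rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(100, 16))  # stand-in for real image embeddings
projected, explained_ratio = pca_project(fake_embeddings, n_components=2)
print(projected.shape, explained_ratio)
```

Images that land close together in the projected space are candidates for being near-duplicates, although two components may discard much of the structure.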

[Resources] Time To Focus On Purchase Phase Now

Almost 4 years ago

Most of the methods I’ve been looking to implement rely on probabilities (great resources by @leocd to get going if you haven’t implemented any, btw).

But the other day, looking at the raw images, I got the sense that many of them looked very much alike. Maybe some image similarity detection technique would help avoid selecting two (or more) images whose scores say they should be labelled when they are actually almost the same image.

I am still far from reaching that level of implementation, but maybe someone finds it doable/useful.
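
The idea in this post can be sketched as a greedy selection: walk the candidates from highest to lowest score and skip any image that is too similar to one already picked. Everything here is an assumption made for illustration: the `select_diverse` helper, the cosine-similarity threshold of 0.95, and the toy scores and embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def select_diverse(candidates, embeddings, budget, max_similarity=0.95):
    """Pick up to `budget` image ids by score, skipping near-duplicates.

    candidates: list of (image_id, score); embeddings: dict image_id -> vector.
    """
    chosen = []
    for image_id, _ in sorted(candidates, key=lambda c: c[1], reverse=True):
        if len(chosen) == budget:
            break
        too_close = any(
            cosine_similarity(embeddings[image_id], embeddings[other]) >= max_similarity
            for other in chosen
        )
        if not too_close:
            chosen.append(image_id)
    return chosen


# Toy example: "a" and "b" are nearly identical, "c" is different.
scores = [("a", 0.90), ("b", 0.85), ("c", 0.40)]
vectors = {"a": [1.0, 0.0], "b": [0.99, 0.05], "c": [0.0, 1.0]}
picked = select_diverse(scores, vectors, budget=2)
print(picked)
```

The threshold trades diversity against score: a lower value rejects more images as duplicates and can leave part of the budget unspent.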

Brainstorming On Augmentations

Almost 4 years ago

I was thinking about 3 today. I have always been training on top of the already trained model, but with a higher probability for the random augmentations. I will try re-training from the pre-trained weights and see how it goes.

Brainstorming On Augmentations

Almost 4 years ago

Not sure I understood: do you mean you are directly feeding pre-processed (i.e. enhanced) images, or the other way around?

Brainstorming On Augmentations

Almost 4 years ago

NNs tend to learn most non-linear transformations, so generally (with the right amount of epochs and data) they should be able to learn most of the ones used in image processing. But I agree that, from an empirical point of view, one would expect things like sharpening to help the model detect scratches more easily.

Another thing to note is that most of us are using pre-trained models, so there is surely something to be said about how much that affects the usefulness of the kind of pre-processing we are talking about.
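
As a concrete example of the kind of pre-processing discussed here, this is a minimal sharpening sketch using a common 3x3 kernel. It assumes a 2D grayscale image with values in [0, 1]; the kernel choice and the `sharpen` helper are illustrative and were not taken from any challenge code.

```python
import numpy as np

# A common 3x3 sharpening kernel; its weights sum to 1, so flat regions are unchanged.
SHARPEN_KERNEL = np.array(
    [[0.0, -1.0, 0.0],
     [-1.0, 5.0, -1.0],
     [0.0, -1.0, 0.0]]
)


def sharpen(image):
    """Sharpen a 2D grayscale image in [0, 1] using edge padding."""
    img = np.asarray(image, dtype=np.float64)
    padded = np.pad(img, 1, mode="edge")
    out = np.zeros_like(img)
    height, width = img.shape
    # Accumulate the weighted, shifted copies of the image (kernel is symmetric).
    for dy in range(3):
        for dx in range(3):
            out += SHARPEN_KERNEL[dy, dx] * padded[dy:dy + height, dx:dx + width]
    return np.clip(out, 0.0, 1.0)


# A soft vertical edge: the step between 0.4 and 0.6 gets exaggerated.
edge = np.array([[0.4, 0.4, 0.6, 0.6]] * 3)
print(sharpen(edge))
```

Whether this helps a pre-trained network is an empirical question, so it is worth comparing runs with and without it.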

Brainstorming On Augmentations

Almost 4 years ago

Yes, I think that there might be some added value in doing some pre-processing. Also, I remember @sergeytsimfer giving a detailed explanation of why most of the seismic attributes (basically image pre-processing) were of no added value: the NN should be able to learn that kind of transformation solely from the images. So I’d prefer to go with the common augmentations and, if the runtime is good, maybe add some pre-processing to test what happens.

Brainstorming On Augmentations

Almost 4 years ago

On augmentation I haven’t gone beyond the things you described. I tried to think of the universe of possible images for this kind of problem and, probably the same as you did, concluded that things like Crop don’t seem to be the best approach.

I haven’t been working with changing the colors of the images yet (say RandomContrast, RandomBrightness). Do you think they add to the model generalization? Couldn’t it be that the channels (i.e. colors) add information for the model? (took the title for the thread very seriously here though lol)
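
To make the question concrete, here is a minimal sketch of a random brightness/contrast augmentation written in plain NumPy. It is not the implementation behind the RandomContrast or RandomBrightness transforms named above; the `random_brightness_contrast` helper, its limits, and its probability parameter are assumptions for illustration. The same gain and shift are applied to every channel, so the relative color information is largely kept.

```python
import numpy as np


def random_brightness_contrast(image, rng, brightness=0.2, contrast=0.2, p=0.5):
    """Randomly change brightness and contrast of a float image in [0, 1]."""
    img = np.asarray(image, dtype=np.float64)
    if rng.random() >= p:
        return img.copy()  # leave the image untouched with probability 1 - p
    gain = 1.0 + rng.uniform(-contrast, contrast)  # contrast: stretch around the mean
    shift = rng.uniform(-brightness, brightness)   # brightness: additive offset
    mean = img.mean()
    return np.clip((img - mean) * gain + mean + shift, 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))  # stand-in for a real RGB image
augmented = random_brightness_contrast(image, rng, p=1.0)
print(augmented.shape, augmented.min(), augmented.max())
```

Comparing validation scores with `p=0.0` and `p=0.5` is one simple way to check whether the augmentation helps generalization on a given dataset.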

[Explainer + Baseline] Baseline with +0.84 on LB

Almost 4 years ago

This is great! Thanks for the advice! I was having trouble getting the pre-trained weights to work and found a solution, though clearly not the most elegant one.

[Explainer + Baseline] Baseline with +0.84 on LB

Almost 4 years ago

Hi all! I have added a new submission for the Community Prize including a Baseline that achieves +0.84 in the LB.

Hope you find it useful!

Kudos to @gaurav_singhal for his initial Baseline that helped me get through creating a submission.

Learn How To Make Your First Submission [🎥 Tutorial]

About 4 years ago

Yesterday I was able to set it up myself; I really wish I had dug further into the discussion to find this.

Baseline submission

About 4 years ago

Great addition! Thanks for this. For those of us who are not too experienced, it is always useful to see other people’s implementations.

What did you get so far?

About 4 years ago

Same here, still trying to figure out how to plug something in without breaking everything!

ADDI Alzheimers Detection Challenge

Community Contribution Prize 🎮 Gaming consoles 🚁 Drones 🥽 VR Headsets

Almost 5 years ago

Hello there!

Amazing Challenge as usual! A question regarding the submissions: do they need to be created in Python or R? E.g., is it possible to create a Power BI report, a Tableau dashboard, etc., that helps with the EDA? Long story short, is it mandatory to also create these Community Challenge submissions in the Aridhia Workbench?

Thanks!

Seismic Facies Identification Challenge

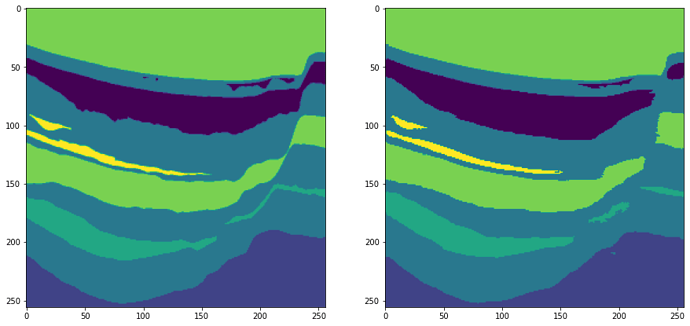

[Explainer]: Seismic Facies Identification Starter Pack

Over 5 years ago

Hi there!

The notebook has been updated; its end-to-end solution gives an F1-score of approximately 0.555 and an accuracy of approximately 0.787.

Of course this could be improved, but keep in mind that the objective of this notebook is to explain the process in an image segmentation problem and to keep the reader focused on what is being done!

Hope some of you find it useful! Don’t forget to like this topic!
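
The post reports an F1-score and an accuracy without saying how the F1 is averaged, so the following is only a sketch of one common choice: accuracy plus a macro-averaged F1 over flattened label arrays. The `accuracy_and_macro_f1` helper and the toy labels are illustrative and do not reproduce the notebook's numbers.

```python
def accuracy_and_macro_f1(y_true, y_pred):
    """Return (accuracy, macro-averaged F1) for two equal-length label sequences."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    f1_scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return accuracy, sum(f1_scores) / len(f1_scores)


# Toy labels for six pixels and three hypothetical facies classes.
true_labels = [0, 0, 1, 1, 2, 2]
pred_labels = [0, 1, 1, 1, 2, 0]
acc, macro_f1 = accuracy_and_macro_f1(true_labels, pred_labels)
print(f"accuracy={acc:.3f} macro F1={macro_f1:.3f}")
```

With imbalanced facies, macro F1 weights rare classes as heavily as common ones, which is one reason it can sit well below accuracy.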

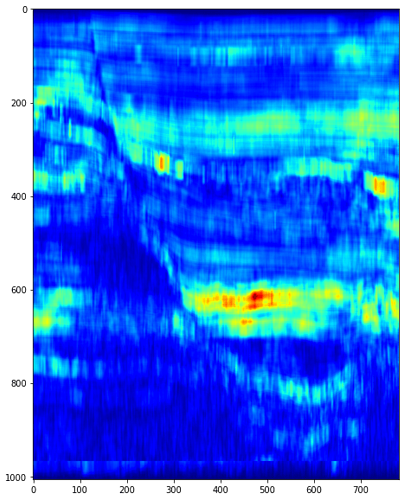

[Explainer] Need extra features? Different input approach? Try Seismic Attributes!

Over 5 years ago

I am currently considering implementing coherency with a certain window so that the reflector packages (similarly behaving reflectors) get bunched up into big blank areas on the image. This would clearly miss the sand channels that have small developments on the seismic cube, but should enhance the definition of big chunks of facies.

I’ve seen @sergeytsimfer’s Colab (amazing work btw) on how the NN should be able to detect and learn the ‘augmentation’ provided by seismic attributes, but does this really hold when you’re changing the image radically? Does it hold when you are stacking these different ‘augmentations’ in different channels? I’ve seen a small improvement when using the seismic attributes, but can’t really say it is a confirmed improvement since I didn’t keep working on them (trying to get the model right first). I believe this is a nice discussion to have! I’d love to see your work on coherency @sergeytsimfer.

@leocd are you using an image approach or an ndarray approach? (Maybe a ‘too soon’ question though lol)
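
Stacking attributes in different channels, as discussed in the post above, can be sketched as follows. The two attributes used here are crude stand-ins computed from the amplitude itself (they are not coherency), and `normalize` and `stack_attributes` are hypothetical helpers chosen for illustration.

```python
import numpy as np


def normalize(section):
    """Scale a 2D section to [0, 1]; a constant section maps to zeros."""
    a = np.asarray(section, dtype=np.float64)
    span = a.max() - a.min()
    return (a - a.min()) / span if span > 0 else np.zeros_like(a)


def stack_attributes(amplitude, *attributes):
    """Stack a seismic section and same-shaped attributes into (H, W, C)."""
    layers = [normalize(amplitude)] + [normalize(attr) for attr in attributes]
    return np.stack(layers, axis=-1)


rng = np.random.default_rng(0)
section = rng.normal(size=(64, 32))    # stand-in for a real inline/crossline section
magnitude = np.abs(section)            # crude stand-in for an envelope-like attribute
energy = section ** 2                  # crude stand-in for an energy-like attribute
model_input = stack_attributes(section, magnitude, energy)
print(model_input.shape)
```

A three-channel stack like this has the same layout as an RGB image, which makes it easy to feed to models that expect image input.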

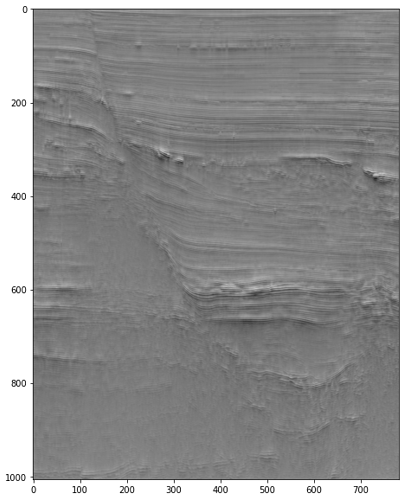

[Explainer]: Seismic Facies Identification Starter Pack

Over 5 years ago

Just a simple notebook

Hi there! I am a recently graduated geophysicist from Argentina. I got into Data Science and Machine Learning just a few months ago, so I’m certainly an inexperienced little tiny deep learning practitioner as you may well guess.

Here are some questions you may ask yourselves…

What is this challenge?

This challenge tries to overcome something that has troubled the Oil&Gas industry for many years: how can I interpret such a huge amount of information in so little time?

Seismic facies are one of the abstractions that are regularly used to compress the amount of information. We assume that certain patterns in the seismic image correspond to the response of a certain layer. This layer, when grouped with several other layers with the same characteristics, tends to generate a response that stands out from other patterns in the seismic image.

This common response helps group the layers that behave similarly, and thus helps reduce the amount of information in the image.

But how do we group all these patterns together?! Well, Deep Learning to the rescue!

Let’s dive in:

What did you do?

Over the last few hours I’ve tried to put together a simple notebook that goes from showing some simple seismic attributes to implementing a deep learning model. I hope that if you are just starting out like me you’ll find it useful. And if you are an already experienced machine learning practitioner, you might find some insights on how to improve your results!

A couple of examples from the notebook:

TECVA seismic attribute

RMS seismic attribute
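
For readers unfamiliar with the RMS attribute named above, here is a minimal sketch of a sliding-window RMS amplitude along a single trace. The window length, the edge padding, and the `rms_attribute` helper are assumptions for illustration; the notebook may compute the attribute differently.

```python
import numpy as np


def rms_attribute(trace, window=5):
    """Sliding-window RMS amplitude of a 1D trace (window must be odd)."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    x = np.asarray(trace, dtype=np.float64)
    # Pad the squared amplitudes so the output has the same length as the input.
    padded = np.pad(x ** 2, window // 2, mode="edge")
    kernel = np.ones(window) / window
    return np.sqrt(np.convolve(padded, kernel, mode="valid"))


# A single spike gets smeared over the window, highlighting local energy.
spike = [0.0, 0.0, 3.0, 0.0, 0.0]
print(rms_attribute(spike, window=3))
```

For a 2D section, the same function can be applied trace by trace, for example with `np.apply_along_axis(rms_attribute, 0, section)` when time runs along the first axis.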

How did you do it?

I started by using my seismic attributes knowledge, then I tried to think of a way of implementing different kinds of information, and finally, I used the keras-image-segmentation package that you can find here: https://github.com/divamgupta/image-segmentation-keras to produce a model, train it, and generate the final predictions.

Sample image of the results of training the net (label set / trained set):

Well, but I could have done that on my own!..

Well, of course you could! But note that I also try to give some insight into using this seismic dataset and how you may improve your results by taking this into account. Maybe it will help you!

I don’t really know about these seismic facies and stuff…

Well, maybe you just like to look at some random guy’s notebook, and you’ll probably like those nice images!

LINK TO THE NOTEBOOK: https://colab.research.google.com/drive/1935VS3tMKoJ1FbgR1AOkoAC2z0IO9HWr?usp=sharing

You can also get in touch via LinkedIn (link to the post with this notebook)!

Hope you guys find it useful and fun, and maybe consider giving it a thumbs up!

See you around!

Notebooks

- [Utils] Round 2 Final Model compilation: Compilation of the Last Stage's 5 final models. santiactis · Almost 4 years ago

- [Analysis + Utils] Labels co-occurrence & Image Similarity: Labels co-occurrence analysis & Image Similarity using image embeddings. santiactis · Almost 4 years ago

- [Explainer + Baseline] Get your Baseline right! (+0.84 LB): High Accuracy Baseline. santiactis · Almost 4 years ago

- End-to-End Simple Solution (9 Models + Data Imbalance): In this simple notebook I provide an End-to-End Solution with 9 models + data imbalance options. santiactis · Almost 5 years ago

- [Explainer] Just a simple Video-Notebook - Starter Pack: Video version of Just a Simple Notebook. santiactis · Almost 5 years ago

- [Explainer]: Seismic Facies Identification Starter Pack: keras-image-segmentation package to produce a model, train it, and generate the final predictions. santiactis · Over 5 years ago

[Announcement] Leaderboard Winners

Almost 4 years ago

Congratulations everyone! Same as @tfriedel, I am really curious about the solutions; this challenge was quite unpredictable!