Location

Kyiv, UA

Challenges Entered

Revolutionising Interior Design with AI

Latest submissions

| Status | Submission |
|---|---|
| failed | 251634 |
| graded | 251621 |
| graded | 251606 |

Trick Large Language Models

Small Object Detection and Classification

Understand semantic segmentation and monocular depth estimation from downward-facing drone images

Latest submissions

| Status | Submission |
|---|---|
| graded | 218909 |
| submitted | 218901 |
| graded | 218897 |

Identify user photos in the marketplace

Latest submissions

| Status | Submission |
|---|---|
| graded | 216437 |
| graded | 216435 |
| failed | 216348 |

A benchmark for image-based food recognition

Latest submissions

| Status | Submission |
|---|---|
| graded | 181483 |
| graded | 181374 |
| failed | 181373 |

A benchmark for image-based food recognition

- Lab_3i: Food Recognition Benchmark 2022
- seg-dep: Scene Understanding for Autonomous Drone Delivery (SUADD'23)
- rank-re-rank-re-rank: Visual Product Recognition Challenge 2023
- StableDesign: Generative Interior Design Challenge 2024

Generative Interior Design Challenge 2024

Submission stuck at intermediate state

Almost 2 years ago: Dear @aicrowd_team, @mohanty and @dipam, could you please help us with this issue?

Submission stuck at intermediate state

Almost 2 years ago: We have the same situation today with submission #251537; please check it as well. :(

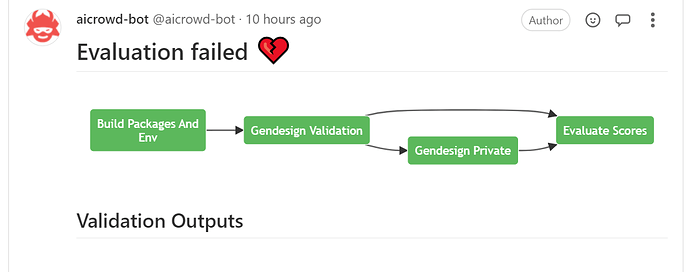

Submission failed

Almost 2 years ago: Also, @dipam, @aicrowd_team, our submissions have been waiting for scoring for more than a day; could you please help with this as well?

UPD: The submissions have started scoring, so that problem is resolved. Thanks.

The only remaining problem is the failed submission.

Submission failed

Almost 2 years ago: For submission submission_hash: 4f831f9a590dc4cc00ce56490653a6e4bdb2a20e

I got a failed status, even though all steps completed successfully and no error was reported.

@dipam, could you please check this submission and fix it if possible?

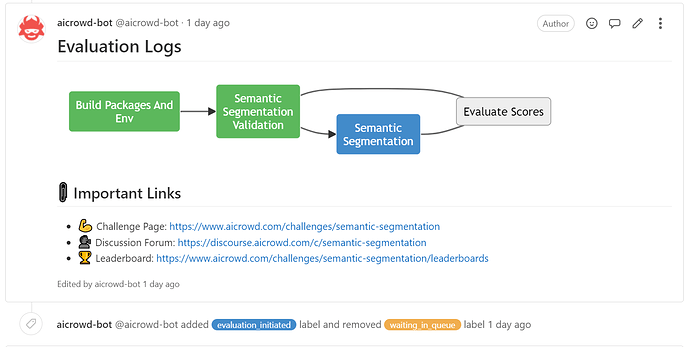

Semantic Segmentation

Same submissions with different weights failing

Almost 3 years ago: @dipam, please note that earlier this problem was solved just by resubmitting. Now I am facing a similar situation: my submissions (without any changes) fail in some cases, and in other cases a submission stays stuck for 10 hours or even more than 24 hours.

Visual Product Recognition Challenge 2023

My solution for the challenge

Almost 3 years ago: We tried TensorRT (TRT) on our server before inference. We also ran re-ranking on the GPU, but perhaps that took longer…

Even with ViT-H + re-ranking, our solution took almost 10 minutes, so depending on the hardware it sometimes failed and sometimes ran successfully.

My solution for the challenge

Almost 3 years ago: @bartosz_ludwiczuk, congratulations on achieving second place! I’m looking forward to reading about your winning solution.

My solution for the challenge

Almost 3 years ago: We attempted to incorporate multiple external datasets into our experiments, spending considerable time trying to train our ViT-H model jointly on Product10k and other datasets, as well as training on other datasets and then fine-tuning on Product10k. Surprisingly, despite our efforts, our current leaderboard score was achieved using only the Product10k dataset; every other dataset decreased our score.

To improve our results, we used re-ranking as postprocessing, which gave us a marginal improvement of approximately 0.01%. Additionally, we experimented with ConvNeXt and ViT-G models, which boosted our local score by about 0.03%. However, even with TensorRT, our models could not complete inference within 10 minutes.
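The post does not say which re-ranking method was used. Purely as an illustration, here is a minimal sketch of alpha query expansion (αQE), one common re-ranking technique for embedding retrieval: the query is re-issued as the sum of itself and its top-k neighbours, each weighted by its cosine similarity raised to the power alpha. It assumes plain embedding vectors (for example from an OpenCLIP image encoder); the function names and the `k`/`alpha` defaults are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def rank_by_cosine(query: Vector, gallery: Sequence[Vector]) -> Tuple[List[int], List[float]]:
    """Rank gallery items by dot product with the query (inputs assumed unit-length)."""
    sims = [sum(q * g for q, g in zip(query, item)) for item in gallery]
    order = sorted(range(len(gallery)), key=lambda i: sims[i], reverse=True)
    return order, sims


def alpha_qe_rerank(query: Vector, gallery: Sequence[Vector], k: int = 2, alpha: float = 3.0) -> List[int]:
    """Alpha query expansion: re-query with the query plus its top-k neighbours,
    each neighbour weighted by (cosine similarity) ** alpha."""
    q = l2_normalize(query)
    g = [l2_normalize(item) for item in gallery]
    order, sims = rank_by_cosine(q, g)
    expanded = list(q)
    for idx in order[:k]:
        weight = max(sims[idx], 0.0) ** alpha  # ignore negatively correlated neighbours
        expanded = [e + weight * x for e, x in zip(expanded, g[idx])]
    new_order, _ = rank_by_cosine(l2_normalize(expanded), g)
    return new_order


print(alpha_qe_rerank([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]))  # prints [0, 2, 1]
```

Because the expanded query averages in its closest neighbours, it tends to pull up gallery items that agree with the whole neighbourhood rather than with the query alone; it costs one extra similarity pass, which matters under a fixed inference time limit.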

📥 Guidelines For Using External Dataset

Almost 3 years ago: We have tried:

- rp2k
- JD_Products_10K
- Shopee
- Aliproducts
- DeepFashion_CTS
- DeepFashion2
- Fashion_200K
- Stanford_Products

Right now we are using only Products_10K and models from OpenCLIP.

Product Matching: Inference failed

Almost 3 years ago: @dipam, have you changed any server settings for inference?

Previously I saw about one failed submission per day, and simply rerunning it helped.

However, today I changed only the NN weight files and nothing else: 1 submission succeeded, and 4 others failed, although their weights have the same size and come from the same model, just from different epochs.

This seems very strange to me…

Could you please check it?

#214502, #214483, #214469

If it is a timeout, how can other weights succeed, and why does simply resubmitting sometimes help?

Product Matching: Inference failed

Almost 3 years ago: @dipam, could you please check #212567 and #212566?

They failed at the “Build Packages And Env” step, even though I changed only the NN parameters.

This seems very strange to me…

Is everybody using same Products 10k dataset

Almost 3 years ago: I don’t know about other competitors (I can only assume), but we use only this dataset, and it is sufficient.

Working on the model, the training pipeline, etc. was enough to reach LB = 0.62114.
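The post reports only the leaderboard number, not the metric behind it. Assuming the leaderboard uses a retrieval mean average precision over the top-k ranked gallery items (an assumption; neither the metric nor the cut-off `k=1000` is stated here), a per-query score could be computed with the minimal sketch below. The function names are hypothetical, and normalizing by `min(len(relevant), k)` is one common convention among several.

```python
from typing import Sequence, Set


def average_precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 1000) -> float:
    """AP@k for one query: sum of precision@rank at each rank holding a relevant item,
    divided by min(|relevant|, k)."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / min(len(relevant), k)


def mean_average_precision_at_k(
    rankings: Sequence[Sequence[str]], relevant_sets: Sequence[Set[str]], k: int = 1000
) -> float:
    """mAP@k: the average of per-query AP@k scores."""
    scores = [average_precision_at_k(r, rel, k) for r, rel in zip(rankings, relevant_sets)]
    return sum(scores) / len(scores) if scores else 0.0
```

For example, a ranking `["a", "x", "b"]` with relevant items `{"a", "b"}` scores (1/1 + 2/3) / 2 = 5/6.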

Mono Depth Perception

Round1 and Round2

Almost 3 years ago: @dipam, thank you for the clarification; now it makes sense to me!

Round1 and Round2

Almost 3 years ago: @dipam, could you please provide further details about the current situation? It appears that there is some confusion regarding the status of rounds 1 and 2. Additionally, updating the dataset at this stage of the competition seems unusual.

To clarify, it seems that round 1 has been completed, but there was no mention of it in the initial description. It is unclear what this means for the competition as a whole.

Furthermore, updating the dataset at this point in the competition seems questionable, especially if the previous dataset was used for calculating the leaderboard score. It is uncertain whether a new dataset will be used for the leaderboard calculation, and if so, how this will affect the competition.

Food Recognition Benchmark 2022

End of Round 2⏱️

Almost 4 years ago: Thanks to AIcrowd for hosting this yearly competition and benchmark. It was a lot of fun working on it, exploring and learning instance segmentation models for food recognition.

Thanks to my teammates for this work.

Thanks to @shivam for all the help with the AIcrowd infrastructure, to @gaurav_singhal for your paper on your best approach from the previous year and for the unbelievable race for the top score, and to the other participants for a good time in this competition!

Also, congratulations to @gaurav_singhal and @Camaro!!!

Extension of the round?

Almost 4 years ago: I see, so the problem was with the countdown. Thank you for the clarification.

Top teams solutions

Almost 2 years ago: First of all, I’d like to thank the organizers for putting together such a good and interesting competition, and for providing a solid baseline.

I also want to encourage other top teams to share their solutions. It’s through this sharing of knowledge and techniques that we all grow and learn new things, which was one of the main reasons we participated in the first place.

So, let’s dive into our solution:

We began by assembling a dataset of around 300,000 images sourced from Airbnb. We processed these images to extract features that allowed us to distinguish between indoor and outdoor scenes, and then kept only the indoor images. For each indoor image, we generated a segmentation mask and a depth map. Additionally, we created an empty-room image for each one using an inpainting pipeline.

Using the LLaVA-1.5 model, we generated image descriptions. After creating the dataset, we trained two custom ControlNets, one conditioned on depth maps and one on segmentation maps. During inference, we used the inpainting pipeline together with the two custom ControlNets and the IP-Adapter.
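The dataset-building steps described above can be outlined as a small pipeline. This is a hypothetical sketch, not the team's code: `build_dataset`, `TrainingSample`, and the callables `is_indoor`, `segment`, `estimate_depth`, `make_empty_room` and `describe` are illustrative names standing in for the indoor/outdoor filter, the segmentation and depth models, the inpainting pipeline, and LLaVA-1.5 captioning.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass
class TrainingSample:
    """One training record: the source photo plus the signals derived from it."""
    image: Any
    segmentation: Any  # conditioning input for the segmentation ControlNet
    depth: Any         # conditioning input for the depth ControlNet
    empty_room: Any    # inpainted version of the room
    caption: str       # text description from a captioning model


def build_dataset(
    images: Iterable[Any],
    is_indoor: Callable[[Any], bool],
    segment: Callable[[Any], Any],
    estimate_depth: Callable[[Any], Any],
    make_empty_room: Callable[[Any], Any],
    describe: Callable[[Any], str],
) -> List[TrainingSample]:
    """Keep indoor images only, then derive every conditioning signal for each one."""
    samples: List[TrainingSample] = []
    for image in images:
        if not is_indoor(image):  # outdoor scenes are dropped before any expensive model call
            continue
        samples.append(
            TrainingSample(
                image=image,
                segmentation=segment(image),
                depth=estimate_depth(image),
                empty_room=make_empty_room(image),
                caption=describe(image),
            )
        )
    return samples
```

Passing the models in as callables keeps the filtering logic separate from the heavy models, so each stage can be swapped or replaced by a cheap stub when testing the pipeline.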

For those interested in replicating our work, the full code is available on GitHub, and the submission code is available here.

You can also try out our final best model here.

If you find our model space and solution intriguing, we would be grateful for likes on Hugging Face as well as on GitHub. Your support means a lot to us.