Location

US


theSureThing

Novartis DSAI Challenge

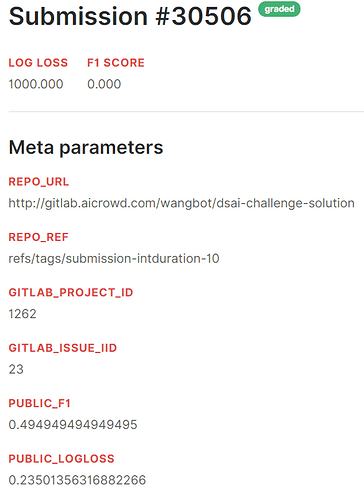

Log Loss and F1 on Leaderboard different from "PUBLIC_F1" and "PUBLIC_LOGLOSS"

Almost 6 years ago

Thanks @shivam. That was just it. Works now.

RAW DATA now available in shared folder

About 6 years ago

Thanks for making the raw data available in this more approachable manner. Is there a way to share the data dictionary of the raw data, or instructions on how to get the data dictionary through the Informa API?

I ask because the master data dictionary provided under “Resources” is not complete. Many variables’ descriptions are missing or not informative. Examples:

-

“strTerminationReason”: what does is mean when this variable is “” (empty string)?

-

“TherapyDescription”, “strStudyDesign”, “strPrimaryEndpoint”, “strPatientPopulation”, “DrugDeliveryRouteDescription”: all missing variable description.

It’d be helpful if we could know how are these variables collected: free text in some trial registry? Or hopefully some more structured meta-data with which we could engineer some features out of the long text values. e.g. we want to create a new endpoint category: biomarker, survival, etc.

-

“intpriorapproval”: missing description. What do “[]” and “[0]” mean?

-

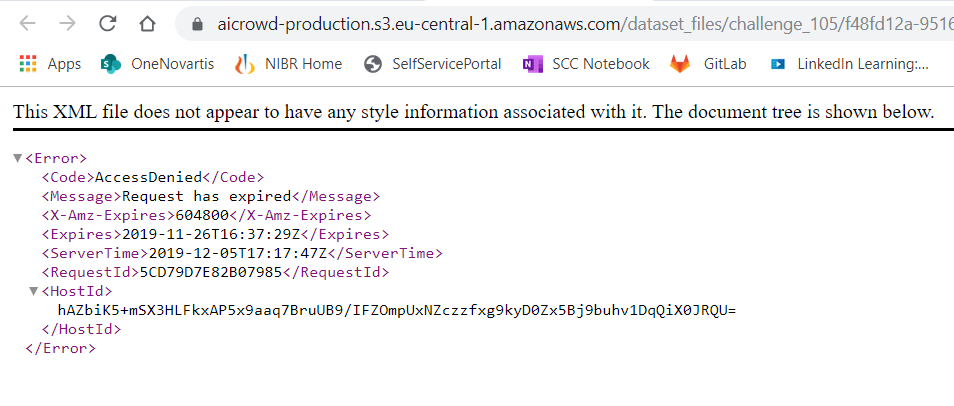

“drugCountryName”: missing description, not in the script documentation, and are all a big string separated by “|” for all rows (see screenshot below)
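To make the feature-engineering idea in the list above concrete, here is a minimal Python sketch of splitting a “|”-separated value such as “drugCountryName” and of mapping a free-text endpoint such as “strPrimaryEndpoint” to a coarse category. The function names, the keyword map, and the sample strings are all hypothetical and chosen only for illustration; nothing here reflects how the organizers collect or encode these fields.

```python
# Hypothetical keyword map: categories and keywords are illustrative assumptions,
# not taken from the challenge's data dictionary.
ENDPOINT_KEYWORDS = {
    "survival": ("survival", "mortality"),
    "biomarker": ("biomarker",),
}


def split_pipe_field(value):
    """Split a "|"-separated string into a list of stripped, non-empty parts."""
    if not value:
        return []
    return [part.strip() for part in value.split("|") if part.strip()]


def endpoint_category(text):
    """Return the first category whose keyword occurs in the text, else "other"."""
    lowered = (text or "").lower()
    for category, keywords in ENDPOINT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return "other"


if __name__ == "__main__":
    # Made-up sample values, only to show the call pattern.
    print(split_pipe_field("US|Germany| Japan "))
    print(endpoint_category("Overall survival at 12 months"))
```

Simple keyword matching like this will misclassify many endpoints, which is why a description of how the fields are populated would still be needed.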

Copy/Paste into the workspace example:

About 6 years ago

This link seems to point to an XML file, and it says “AccessDenied”.

What is being evaluated during submission?

About 6 years ago

Is the predict.R or predict.py script run in the queue when we submit a solution through SSH? If so, how do we know where the test dataset is located in the evaluation environment?

Also, when the GitLab issue says the evaluation failed, is there a way to get the log file so we can debug what went wrong?

Thanks.

How to use conda-forge or CRAN for packages in evaluation?

Almost 6 years ago

@bjoern.holzhauer Hi Bjoern, were you successful in using glmnet during evaluation by adding “- r-glmnet 2.0_16” to the environment.yml file? Thanks.