Challenges Entered

Audio Source Separation using AI

Latest submissions

| Status | Submission |
|---|---|
| graded | 236036 |
| failed | 236033 |
| graded | 220432 |

Latest submissions

| Status | Submission |
|---|---|
| graded | 156783 |
| failed | 156779 |
| failed | 156748 |

Music source separation of an audio signal into separate tracks for vocals, bass, drums, and other

Latest submissions

| Status | Submission |
|---|---|
| graded | 220432 |
| graded | 220426 |
| graded | 220405 |

Source separation of a cinematic audio track into dialogue, sound-effects and misc.

Latest submissions

| Status | Submission |
|---|---|
| graded | 236036 |
| failed | 236033 |
| graded | 220319 |

| Participant | Rating |
|---|---|
| sunglin_yeh | 0 |
| kinwaicheuk | 0 |
| csteinmetz1 | 0 |
| alina_porechina | 0 |
| owlwang | 0 |

| Participant | Rating |
|---|---|
| faroit | 0 |
| sunglin_yeh | 0 |
| kinwaicheuk | 0 |
| Wang_Yu_Li | 0 |
| csteinmetz1 | 0 |

- Kazane_Ryo_no_Danna (Music Demixing Challenge ISMIR 2021)
- aim-less (Sound Demixing Challenge 2023)

Cinematic Sound Demixing Track - CDX'23

Post-challenge discussion

Over 2 years ago

Thanks, @XavierJ, for opening the thread.

I’m Chin-Yun Yu, the team leader of aim-less from C4DM at Queen Mary University of London.

We are currently organising our codebase and will make it publicly available soon.

Some of our key findings throughout the challenge:

Leaderboard A, CDX

Since the aim of the challenge is to hack the evaluation metric, we use the negative SDR as the loss function, which improves the score significantly, by at least 1 dB compared to an L1 loss. We also found that speech seems to always be panned to the centre in the test set: replacing the two predicted dialogue channels with their average improved the score slightly. Our final model consists of an HDemucsV3 and a BandSplitRNN (we implemented it based on the paper and would love to compare it with @XavierJ’s version) that predicts only the music; the final music prediction is the average of the two models' outputs.
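A minimal sketch of the ideas above, in plain Python so it runs without dependencies. It assumes the global (whole-track) SDR definition, 10·log10(Σ s² / Σ (s − ŝ)²), with a small stabilising epsilon whose value (`1e-7`) is an assumption. It also shows the two post-processing steps as described: averaging the left and right dialogue channels to force a centre-panned estimate, and averaging two models' music estimates. All function names are hypothetical; in a real training loop the loss would be written with tensor operations (for example in PyTorch) so that gradients flow.

```python
import math
from typing import List, Sequence, Tuple

Stereo = Tuple[List[float], List[float]]  # (left channel, right channel)


def sdr(reference: Sequence[float], estimate: Sequence[float], eps: float = 1e-7) -> float:
    """Global signal-to-distortion ratio in dB (assumed definition; eps is an assumption)."""
    if len(reference) != len(estimate):
        raise ValueError("reference and estimate must have the same length")
    signal = sum(r * r for r in reference)
    distortion = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    return 10.0 * math.log10((signal + eps) / (distortion + eps))


def neg_sdr_loss(reference: Sequence[float], estimate: Sequence[float]) -> float:
    """Loss to minimise: minimising -SDR maximises the evaluation metric directly."""
    return -sdr(reference, estimate)


def centre_dialogue(dialogue: Stereo) -> Stereo:
    """Replace both channels with their average, i.e. a centre-panned (mono) estimate."""
    left, right = dialogue
    mid = [(x + y) / 2.0 for x, y in zip(left, right)]
    return mid, list(mid)


def average_estimates(a: Stereo, b: Stereo) -> Stereo:
    """Ensemble two models' estimates of the same stem by sample-wise averaging."""
    return tuple(
        [(x + y) / 2.0 for x, y in zip(ch_a, ch_b)] for ch_a, ch_b in zip(a, b)
    )
```

For example, with `ref = [1.0, -1.0, 0.5, 0.0]`, an estimate at half amplitude leaves a quarter of the signal energy as distortion, so `neg_sdr_loss(ref, [0.5 * r for r in ref])` is about -6.02 (that is, -10·log10(4)).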

Leaderboard A, MDX

The strategy we used in the end was to listen through all the tracks in the label-noise dataset and label the clean ones for training. We then trained an HDemucsV3 with data augmentation on those clean tracks. My teammate @christhetree may want to add more details. We missed the team-up deadline, but I was in charge of all the submissions in the last two weeks, so we didn’t exploit any extra quota.
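The workflow above can be sketched roughly as follows, under stated assumptions: the hand-labelled clean tracks are stored as a set of track names, and each training example is a dict of stereo stems. The augmentations shown (a random gain per stem and a random left/right swap, with the mixture rebuilt as the sum of the augmented stems) are common choices for Demucs-style training, but they are an assumption; the post does not say which augmentations were used. All names and the gain range are hypothetical.

```python
import random
from typing import Dict, List, Set, Tuple

Stereo = Tuple[List[float], List[float]]
Track = Dict[str, Stereo]  # stem name -> (left, right), e.g. "vocals", "bass", ...


def keep_clean(tracks: Dict[str, Track], clean_names: Set[str]) -> Dict[str, Track]:
    """Keep only the tracks that were labelled clean after listening."""
    return {name: stems for name, stems in tracks.items() if name in clean_names}


def augment(stems: Track, rng: random.Random,
            gain_range: Tuple[float, float] = (0.25, 1.25)) -> Tuple[Track, Stereo]:
    """Randomly rescale and channel-swap each stem, then rebuild the mixture.

    The gain range is an assumed value. Assumes at least one stem and that
    all stems have the same length.
    """
    out: Track = {}
    for name, (left, right) in stems.items():
        g = rng.uniform(*gain_range)
        if rng.random() < 0.5:  # swap left/right channels half of the time
            left, right = right, left
        out[name] = ([g * x for x in left], [g * x for x in right])
    n = len(next(iter(out.values()))[0])
    mix_left = [sum(s[0][i] for s in out.values()) for i in range(n)]
    mix_right = [sum(s[1][i] for s in out.values()) for i in range(n)]
    return out, (mix_left, mix_right)
```

Rebuilding the mixture from the augmented stems keeps every example a consistent (mixture, targets) pair, so the separation targets still sum exactly to the model input.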

MDX Leaderboard B 1st place solution (kuielab team)

Over 2 years ago

Thanks for sharing. Excellent stuff.

Submission Times

Over 2 years ago

To add to this, my team actually had a quota of ten submissions per day, at least until last week.

It changed to five during the last week.

Submissions are being stuck on "Preparing the cluster for you" for a long time

Almost 3 years ago

Hi @mohanty, could you also help me kill submissions #218648, #218669, and #218872?

They have been hanging there for days, and I don’t want to waste my quota on them.

Submissions are being stuck on "Preparing the cluster for you" for a long time

Almost 3 years ago

Same here on the CDX track.

Question: Access the value stored in gitlab CI/CD variables when building the environment

Almost 3 years ago

After investigating, it seems that setting project variables has no effect.

Question: Access the value stored in gitlab CI/CD variables when building the environment

About 3 years ago

Hi!

I have a question regarding GitLab's CI/CD feature for submissions.

My submission requires a private package that can only be accessed with a private token.

I don’t want to commit the token to git, so I set it as a variable in my project settings and wrote a postBuild script to install the package.

However, the script couldn’t access the token value during the post-build step (specifically, the environment variable I set in the settings is unavailable).

Is there any other way to cope with this?

Can we customize CI/CD variables for this challenge?

Best,

Chin-Yun
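For reference, a minimal sketch of the install step described in this question, assuming the token is exposed to the build as an environment variable (named `PRIVATE_PKG_TOKEN` here, a hypothetical name) and that the package lives in a GitLab repository installable with pip over HTTPS. It fails loudly when the variable is missing, which is exactly the symptom reported above, instead of attempting an unauthenticated install. The repository path is a placeholder, and using `oauth2` as the HTTPS username is an assumption to check against your token type.

```python
import os
import subprocess
import sys
from typing import List, Mapping, Optional

TOKEN_VAR = "PRIVATE_PKG_TOKEN"                  # hypothetical variable name
REPO = "gitlab.com/your-group/your-private-pkg"  # placeholder repository path


def pip_install_command(env: Mapping[str, str] = os.environ) -> List[str]:
    """Build the pip command, refusing to continue if the token is not available."""
    token: Optional[str] = env.get(TOKEN_VAR)
    if not token:
        # Never echo the token itself; only report that it is missing.
        raise RuntimeError(
            f"{TOKEN_VAR} is not set in the build environment; "
            "the private package cannot be installed"
        )
    # Assumption: GitLab accepts an access token over HTTPS with "oauth2" as the username.
    url = f"git+https://oauth2:{token}@{REPO}.git"
    return [sys.executable, "-m", "pip", "install", url]


def main() -> None:
    # check=True makes the build fail if pip fails, instead of silently continuing.
    subprocess.run(pip_install_command(), check=True)
```

A `postBuild` step would call `main()`; if the variable is not injected into the build, the error surfaces immediately with a clear message rather than as a confusing authentication failure.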

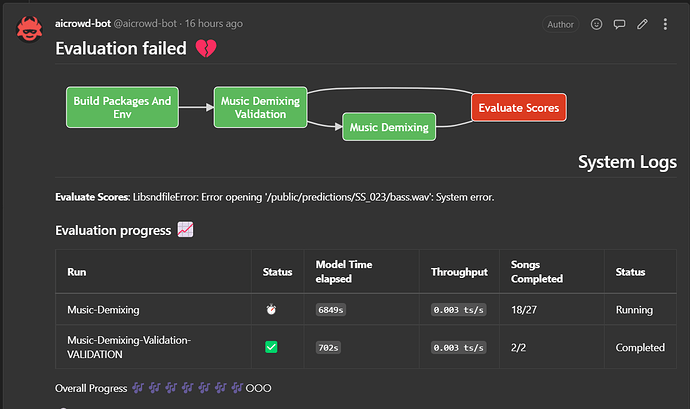

Evaluation failed due to system error

About 3 years ago

A few of my submissions failed at the evaluation stage and encountered this error:

Is there any way to avoid this problem? It seems like a problem in the submission system.

Music Demixing Challenge ISMIR 2021

📹 Town Hall Recording | Participant Presentations

Over 4 years ago

Thanks for the archive!

My Zoom crashed at the beginning, so I didn’t know what was going on when I returned. Sorry about my mic issues, too.

Anyway, thanks, everyone, for listening to our presentation. I really enjoyed this event, especially with so many excellent participants and informative talks, and it makes me look forward to the ISMIR workshop.

BTW, I have made our training code public on GitHub. If you’re interested in our model, feel free to give it a try!

🎉 The final leaderboard is live

Over 4 years ago

Congrats to all the winners!

My team tried hard in the final week but still couldn’t beat 3rd place on Leaderboard A.

Thank you for hosting this interesting challenge!

Evaluation timed out error encountered

Over 4 years ago

Two of my submissions today failed at the last stage with `Evaluation timed out`. Could you help me re-trigger the evaluation? It seems like a server-side error.

https://gitlab.aicrowd.com/yoyololicon/music-demixing-challenge-starter-kit/-/issues/33

https://gitlab.aicrowd.com/yoyololicon/music-demixing-challenge-starter-kit/-/issues/32

Questions about leaderboards A and B

Over 4 years ago

Hi @StefanUhlich @shivam,

I agree with @agent.

I think this is not stated very clearly in the challenge rules. All of my previous submissions were trained this way, but I didn’t set `external_dataset_used` to true. I believe many participants have also done this without knowing it.

Should these submissions be disqualified from Leaderboard A? If so, how?

Build image failed with "pod deleted during operation"

Over 4 years ago

I submitted again and ran into the same problem.

https://gitlab.aicrowd.com/yoyololicon/music-demixing-challenge-starter-kit/-/issues/17

It seems the runner was killed for no reason.

Build image failed with "pod deleted during operation"

Over 4 years ago

Hi, my submission today failed at the build-image stage. The logs didn’t show any error except the message `pod deleted during operation`. Is this a server-side problem?

My submission:

http://gitlab.aicrowd.com/yoyololicon/music-demixing-challenge-starter-kit/-/issues/16

OSError when downloading files from google drive inside `prediction_setup`

Over 4 years ago

I’m looking for a way to dynamically load the trained model from the web to save on my LFS usage.

I ended up putting my model weights on Google Drive and using Python's `urllib` and `http` packages to download them in the CI runner, but I received the following errors:

```
===========================
Partial run on init: None
All music names on init: ['SS_018', 'SS_008']
Partial run on init: None
All music names on init: ['SS_018', 'SS_008']
Partial run on init: None
All music names on init: ['SS_008', 'SS_018']
Partial run on init: None
All music names on init: ['SS_008', 'SS_018']
Partial run on init: None
All music names on init: ['SS_008', 'SS_018']
Traceback (most recent call last):
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 1317, in do_open
    encode_chunked=req.has_header('Transfer-encoding'))
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1229, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1275, in _send_request
    self.endheaders(body, encode_chunked=encode_chunked)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1224, in endheaders
    self._send_output(message_body, encode_chunked=encode_chunked)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1016, in _send_output
    self.send(msg)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 956, in send
    self.connect()
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1384, in connect
    super().connect()
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 928, in connect
    (self.host,self.port), self.timeout, self.source_address)
  File "/srv/conda/envs/notebook/lib/python3.7/socket.py", line 727, in create_connection
    raise err
  File "/srv/conda/envs/notebook/lib/python3.7/socket.py", line 716, in create_connection
    sock.connect(sa)
OSError: [Errno 99] Cannot assign requested address

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/home/aicrowd/evaluator/music_demixing.py", line 116, in run
    self.evaluation()
  File "/home/aicrowd/evaluator/music_demixing.py", line 92, in evaluation
    self.prediction_setup()
  File "/home/aicrowd/test_unet.py", line 107, in prediction_setup
    save_path="./models", save_name="unet.pth"
  File "/home/aicrowd/remote.py", line 192, in download_large_file_from_google_drive
    resp = opener.open(url)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 525, in open
    response = self._open(req, data)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 543, in _open
    '_open', req)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 503, in _call_chain
    result = func(*args)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 1360, in https_open
    context=self._context, check_hostname=self._check_hostname)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 1319, in do_open
    raise URLError(err)
urllib.error.URLError: <urlopen error [Errno 99] Cannot assign requested address>

Traceback (most recent call last):
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 1317, in do_open
    encode_chunked=req.has_header('Transfer-encoding'))
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1229, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1275, in _send_request
    self.endheaders(body, encode_chunked=encode_chunked)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1224, in endheaders
    self._send_output(message_body, encode_chunked=encode_chunked)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1016, in _send_output
    self.send(msg)
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 956, in send
    self.connect()
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 1384, in connect
    super().connect()
  File "/srv/conda/envs/notebook/lib/python3.7/http/client.py", line 928, in connect
    (self.host,self.port), self.timeout, self.source_address)
  File "/srv/conda/envs/notebook/lib/python3.7/socket.py", line 727, in create_connection
    raise err
  File "/srv/conda/envs/notebook/lib/python3.7/socket.py", line 716, in create_connection
    sock.connect(sa)
OSError: [Errno 99] Cannot assign requested address

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "predict.py", line 27, in <module>
    submission.run()
  File "/home/aicrowd/evaluator/music_demixing.py", line 122, in run
    raise e
  File "/home/aicrowd/evaluator/music_demixing.py", line 116, in run
    self.evaluation()
  File "/home/aicrowd/evaluator/music_demixing.py", line 92, in evaluation
    self.prediction_setup()
  File "/home/aicrowd/test_unet.py", line 107, in prediction_setup
    save_path="./models", save_name="unet.pth"
  File "/home/aicrowd/remote.py", line 192, in download_large_file_from_google_drive
    resp = opener.open(url)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 525, in open
    response = self._open(req, data)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 543, in _open
    '_open', req)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 503, in _call_chain
    result = func(*args)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 1360, in https_open
    context=self._context, check_hostname=self._check_hostname)
  File "/srv/conda/envs/notebook/lib/python3.7/urllib/request.py", line 1319, in do_open
    raise URLError(err)
urllib.error.URLError: <urlopen error [Errno 99] Cannot assign requested address>
```

Is this problem related to the CI environment used for evaluation?

I can run prediction successfully inside Docker on my local machine, using the image built with aicrowd-repo2docker.
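`[Errno 99] Cannot assign requested address` raised from `sock.connect()` often means the process has no usable network interface for outbound connections, which would be consistent with the evaluation environment blocking internet access (an assumption; only the organisers can confirm). A defensive pattern is to try the download with a timeout and fall back to weights committed to the repository. This sketch uses only `urllib.request`; `fetch_weights` and its parameters are hypothetical, and Google Drive's large-file confirmation step is not handled.

```python
import os
import shutil
import urllib.request
from typing import Optional


def fetch_weights(url: str, dest: str, fallback: Optional[str] = None,
                  timeout: float = 30.0) -> str:
    """Download `url` to `dest`; on any network error, return `fallback` if it exists."""
    os.makedirs(os.path.dirname(dest) or ".", exist_ok=True)
    tmp = dest + ".part"  # write to a temporary file so a failed download leaves no truncated checkpoint
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp, open(tmp, "wb") as f:
            shutil.copyfileobj(resp, f)  # stream to disk instead of reading into memory
        os.replace(tmp, dest)  # expose the file only once it is complete
        return dest
    except OSError as err:  # URLError and socket errors are both OSError subclasses
        if os.path.exists(tmp):
            os.remove(tmp)
        if fallback is not None and os.path.exists(fallback):
            print(f"download failed ({err}); using local weights {fallback}")
            return fallback
        raise
```

With a fallback tracked in LFS, a submission still runs when the network is unavailable; without one, the original error is re-raised unchanged so the failure stays visible in the logs.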

My submission doesn't trigger evaluation

Over 4 years ago

Hi, I followed the instructions in the README.md and pushed a tag `submission-v0.1` to my GitLab repo, but nothing happened: the issues page is still empty, and I can’t see any runner running my submission.

Which step did I get wrong?

The command-line output after pushing the tag to the remote:

```
Locking support detected on remote "aicrowd". Consider enabling it with:
  $ git config lfs.https://gitlab.aicrowd.com/yoyololicon/music-demixing-challenge-starter-kit.git/info/lfs.locksverify true
Total 0 (delta 0), reused 0 (delta 0)
remote:
remote: #///( )///#
remote: //// /// ////
remote: ///// ////////// ////
remote: /////////////////////////
remote: /// /////////////////////// ///
remote: ///////////////////////////////////
remote: /////////////////////////////////////
remote: )////////////////////////////////(
remote: ///// /////
remote: (/////// /// /// //////)
remote: /////////// /////// //////////
remote: (///////////////////////////////////////)
remote: ///// /////
remote: /////////////////
remote: ///////////
remote:
remote:
remote: Hello yoyololicon!
remote: Here are some useful links related to the submission you made:
remote:
remote: Your Submissions: https://www.aicrowd.com/challenges/music-demixing-challenge-ismir-2021/submissions?my_submissions=true
remote: Challenge Page: https://www.aicrowd.com/challenges/music-demixing-challenge-ismir-2021
remote: Discussion Forum: https://discourse.aicrowd.com/c/music-demixing-challenge-ismir-2021
remote: Leaderboard: https://www.aicrowd.com/challenges/music-demixing-challenge-ismir-2021/leaderboards
remote:
To gitlab.aicrowd.com:yoyololicon/music-demixing-challenge-starter-kit.git
 * [new tag] submission-v0.1 -> submission-v0.1
```

Continue training on provided pretrained models

Over 4 years ago

Is it OK to continue training from the pretrained baseline models and submit the result as an entry?

If so, which leaderboard should it belong to? Did the provided baselines use external datasets?

London, GB


CDX Leaderboard A first place solution (aim-less)

Over 2 years ago

Hi all, here is the codebase for reproducing our top submission on CDX Leaderboard A, which is also the 4th-place solution on MDX Leaderboard A.