LifeCLEF 2019 Bird Recognition

Bird species recognition in soundscapes

Note: Do not forget to read the Rules section on this page.

The test data is available. Participants can now submit their runs.

Challenge description

The goal of the challenge is to detect and classify all audible bird vocalizations within the provided soundscape recordings. Each soundscape is divided into segments of 5 seconds. Participants should submit a list of species associated with probability scores for each segment.

Data

The training data contains ~50,000 recordings taken from xeno-canto.org and covers 659 common species from North and South America. We limited the number of recordings to a minimum of 15 and a maximum of 100 audio files per species (each file contains a variable number of calls). The recordings vary in quality, sampling rate and encoding. The training data also includes extensive metadata (JSON files) for each recording, providing information on recording location, type of vocalization, presence/absence of background species, etc.

The test data contains soundscape recordings from two regions: (i) ~280h of data recorded in Ithaca, NY, United States and (ii) ~4h of data recorded in Bolívar, Colombia. Each test recording contains multiple species and overlapping vocalizations. Some recordings (especially nighttime recordings) might not contain any bird vocalization.

The validation data will be provided as a complementary resource in addition to the training data and can be used to locally validate the system performance before the test data is released. The validation data includes (i) ~72h of data recorded in Ithaca, NY, United States and (ii) ~25min of data recorded in Bolívar, Colombia. The selection of the validation recordings can be considered representative of the test data and features metadata that provides detailed information on the recording settings. However, not every species from the test data might be present in the validation data.

The test and validation data include a total of ~80,000 expert annotations provided by the Cornell Lab of Ornithology and Paula Caycedo.

Submission instructions

Valid submissions have to include all test recordings and the corresponding predictions independent of the recording location. Each line should contain one prediction item. Each prediction item has to follow this format:

<MediaId;TC1-TC2;ClassId;Probability>

MediaId: ID of each test recording; can be found in XML metadata.

TC1-TC2: Timecode interval with the format of hh:mm:ss and a length of exactly 5 seconds starting with zero at the beginning of each file (e.g.: 00:00:00-00:00:05, then 00:00:05-00:00:10).

ClassId: ID of each species class; can be found in metadata and subfolder names.

Probability: Between 0 and 1; higher values indicate greater confidence in the prediction.
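The timecode rule above (5-second intervals in hh:mm:ss, starting at zero) can be sketched in a few lines of Python. This is only an illustration: the function names `format_timecode` and `segment_timecodes` are hypothetical, and the handling of a trailing partial segment (here it is still listed as a full 5-second interval) is an assumption that the rules do not spell out.

```python
import math
from typing import List


def format_timecode(total_seconds: int) -> str:
    """Format a non-negative number of whole seconds as hh:mm:ss."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


def segment_timecodes(duration_seconds: float, segment_length: int = 5) -> List[str]:
    """List the TC1-TC2 intervals covering a recording of the given duration.

    Assumption: a recording whose length is not a multiple of the segment
    length still gets a final interval of the full segment length.
    """
    n_segments = math.ceil(duration_seconds / segment_length)
    return [
        f"{format_timecode(i * segment_length)}-{format_timecode((i + 1) * segment_length)}"
        for i in range(n_segments)
    ]
```

For example, `segment_timecodes(12)` yields the intervals 00:00:00-00:00:05, 00:00:05-00:00:10 and 00:00:10-00:00:15.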

A valid submission item would look like this:

SSW003;00:13:25-00:13:30;cedwax;0.87345
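Before uploading a run, it may help to check each line against the format described above. The following is a minimal sketch, not an official validator: the function name `parse_prediction` and the returned tuple layout are hypothetical, and the checks only cover what this page states (four semicolon-separated fields, a 5-second interval aligned to the start of the file, and a probability between 0 and 1).

```python
from typing import Tuple


def _to_seconds(timecode: str) -> int:
    """Convert an hh:mm:ss timecode into whole seconds."""
    hours, minutes, seconds = (int(part) for part in timecode.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def parse_prediction(line: str) -> Tuple[str, int, int, str, float]:
    """Parse one 'MediaId;TC1-TC2;ClassId;Probability' line.

    Returns (media_id, start_seconds, end_seconds, class_id, probability)
    and raises ValueError if the line does not match the described format.
    """
    fields = line.strip().split(";")
    if len(fields) != 4:
        raise ValueError(f"expected 4 fields, got {len(fields)}: {line!r}")
    media_id, interval, class_id, probability_text = fields

    start_text, end_text = interval.split("-")
    start, end = _to_seconds(start_text), _to_seconds(end_text)
    # Intervals are exactly 5 seconds long and start at multiples of 5.
    if end - start != 5 or start % 5 != 0:
        raise ValueError(f"invalid 5-second interval: {interval!r}")

    probability = float(probability_text)
    if not 0.0 <= probability <= 1.0:
        raise ValueError(f"probability out of range: {probability_text!r}")

    return media_id, start, end, class_id, probability
```

Calling `parse_prediction` on the example item above returns the media ID `SSW003`, the interval from second 805 to second 810, the class ID `cedwax` and the probability 0.87345.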

You can find a sample run as part of the validation data.

Each participating group is allowed to submit up to 10 runs obtained from different methods. Semi-supervised, interactive or crowdsourced approaches are allowed, but will be compared independently from fully automatic methods. Any human assistance in the processing of the test queries needs to be clearly indicated in the submitted runs.

Participants are allowed to use any of the provided complementary metadata in addition to the audio data. Participants are also allowed to use additional external training data, but under the conditions that

(i) the experiment is entirely reproducible, i.e. that the external resource used is clearly referenced and accessible to any other research group in the world and

(ii) participants submit at least one run without external training data so that we can study the contribution of these additional resources.

Participants are also allowed to use the provided validation data for training under the conditions that

(i) participants submit at least one run that only uses training data and

(ii) participants clearly state when validation data was used for training in any of the additional runs.

As soon as the submission is open, you will find a “Create Submission” button on this page (next to the tabs).

Citations

Information will be posted after the challenge ends.

Evaluation criteria

The evaluation metric will be the classification mean Average Precision (c-mAP), which treats each class c of the ground truth as a query. This means that for each class c, we will extract from the run file all predictions with ClassId=c, rank them by decreasing probability and compute the average precision for that class. We will then take the mean across all classes. More formally:

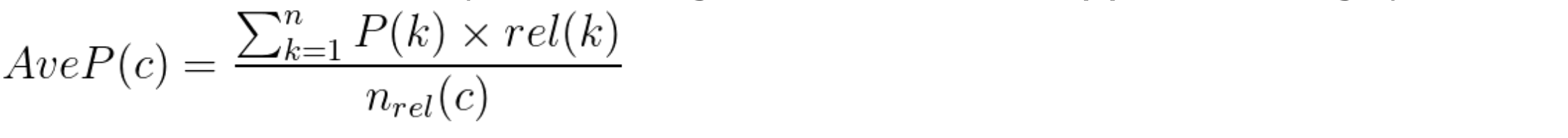

where C is the number of species in the ground truth and AveP(c) is the average precision for a given species c computed as:

where k is the rank of an item in the list of the predicted segments containing c, n is the total number of predicted segments containing c, P(k) is the precision at cut-off k in the list, rel(k) is an indicator function equaling 1 if the segment at rank k is a relevant one (i.e. is labeled as containing c in the ground truth) and nrel is the total number of relevant segments for c.
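For local validation, the metric as defined above can be sketched in Python. This is an illustrative sketch rather than the organizers' evaluation script: the function names, the `(segment_id, class_id, probability)` tuple layout and the ground-truth dictionary are hypothetical, and ties in probability are left in input order, which is an assumption.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def average_precision(ranked_relevance: List[bool], n_relevant: int) -> float:
    """AveP for one class: sum of P(k) * rel(k) over ranks k, divided by n_rel."""
    if n_relevant == 0:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for k, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / k  # P(k) at a relevant rank
    return precision_sum / n_relevant


def classification_map(
    predictions: Iterable[Tuple[str, str, float]],
    ground_truth: Dict[str, Set[str]],
) -> float:
    """c-mAP: mean of AveP(c) over every class c present in the ground truth.

    predictions: (segment_id, class_id, probability) tuples.
    ground_truth: maps each class_id to the set of segment_ids containing it.
    """
    by_class = defaultdict(list)
    for segment_id, class_id, probability in predictions:
        by_class[class_id].append((probability, segment_id))

    scores = []
    for class_id, relevant_segments in ground_truth.items():
        # Rank this class's predictions by decreasing probability.
        ranked = sorted(by_class.get(class_id, []), key=lambda item: item[0], reverse=True)
        relevance = [segment_id in relevant_segments for _, segment_id in ranked]
        scores.append(average_precision(relevance, len(relevant_segments)))
    return sum(scores) / len(scores) if scores else 0.0
```

Note that a class in the ground truth with no predictions at all contributes an average precision of 0, so missing a species lowers the mean.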

Resources

Contact us

- Discussion Forum: https://www.crowdai.org/challenges/lifeclef-2019-bird-soundscape/topics

We strongly encourage you to use the public channel mentioned above for communication between participants and organizers. In exceptional cases, if you have queries or comments that require a private channel, you can send us an email at:

- Stefan Kahl: stefan[DOT]kahl[AT]informatik[DOT]tu-chemnitz[DOT]de

- Alexis Joly: alexis[DOT]joly[AT]inria[DOT]fr

- Hervé Goëau: herve[DOT]goeau[AT]cirad[DOT]fr

More information

You can find additional information on the challenge here: https://www.imageclef.org/BirdCLEF2019

Prizes

LifeCLEF 2019 is an evaluation campaign organized as part of the CLEF initiative labs. The campaign offers several research tasks that welcome participation from teams around the world. The results of the campaign appear in the working notes proceedings, published by CEUR Workshop Proceedings (CEUR-WS.org). Selected contributions from the participants will be invited for publication the following year in the Springer Lecture Notes in Computer Science (LNCS), together with the annual lab overviews.

