MARLO 2018

Multi-Agent Reinforcement Learning in Minecraft

Please consider citing the following paper if you find this work useful: Diego Perez-Liebana, Katja Hofmann, Sharada Prasanna Mohanty, Noburu Kuno, Andre Kramer, Sam Devlin, Raluca D. Gaina: “The Multi-Agent Reinforcement Learning in MalmÖ (MARLÖ) Competition”, 2019, Challenges in Machine Learning (NIPS Workshop), 2018 http://arxiv.org/abs/1901.08129

We are accepting submissions for Round 2. Please make a submission following the instructions in the starter kit.

Due to the delayed opening of the submission system, we have extended the Entry Period to December 31, 2018. Please see the “Winner Selection” section for details.

What is the Challenge?

Learning to Play: The Multi-Agent Reinforcement Learning in MalmÖ Competition (“Challenge”) is a new challenge that proposes research on Multi-Agent Reinforcement Learning using multiple games. Participants will create learning agents able to play multiple 3D games defined in the Malmo platform. The aim of the competition is to encourage AI research on more general approaches via multi-player games. For this, the Challenge will consist of not one but several games, each with several tasks of varying difficulty and settings. Some of these tasks will be public, and participants will be able to train on them. Others, however, will be private and used only to determine the final rankings of the competition.

Organizer (Microsoft, Queen Mary University of London and crowdAI) will make the Challenge tasks and sample code available via GitHub on or before the Challenge start date. Entries will be judged by Organizer according to the criteria outlined in the “Winner Selection” section below.

The following prizes will be awarded: (1) the top 7 teams will each be awarded a MARLO Travel Grant with a maximum value of $2,500 USD to attend a relevant academic conference or workshop; additionally, the 1st-place team will be awarded a second MARLO Travel Grant with a maximum value of $2,500 USD to attend the Applied Machine Learning Days 2019; and (2) three winning teams will be awarded Microsoft Azure Sponsorships with a maximum value of $10,000 USD for 1st place, $5,000 USD for 2nd place and $3,000 USD for 3rd place. Please see the “Prizes” section below for further details.

What are the start and end dates?

The Challenge starts at 00:01 Pacific Standard Time on July 27, 2018, and the Qualifying Round ends at 23:59 Pacific Standard Time on December 31, 2018 (“Entry Period”). Entries must be received within the Entry Period to be eligible.

The Kick-off Tournament is held at the MARLO workshop of AIIDE’18, the 14th AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, hosted by the University of Alberta in Edmonton, AB, Canada, on November 14, 2018.

The Final Tournament will be held offline within a week after the deadline to decide the winners.

Games and Tasks

One of the main features of this competition is that agents play in multiple games. Therefore, several tasks are proposed for this contest. For the purpose of this document and the competition itself, we define:

- Game: each one of the different scenarios in which agents play.

- Task: each instance of a game. Tasks within a game may differ from each other in terms of level layout, size, difficulty and other game-dependent settings.

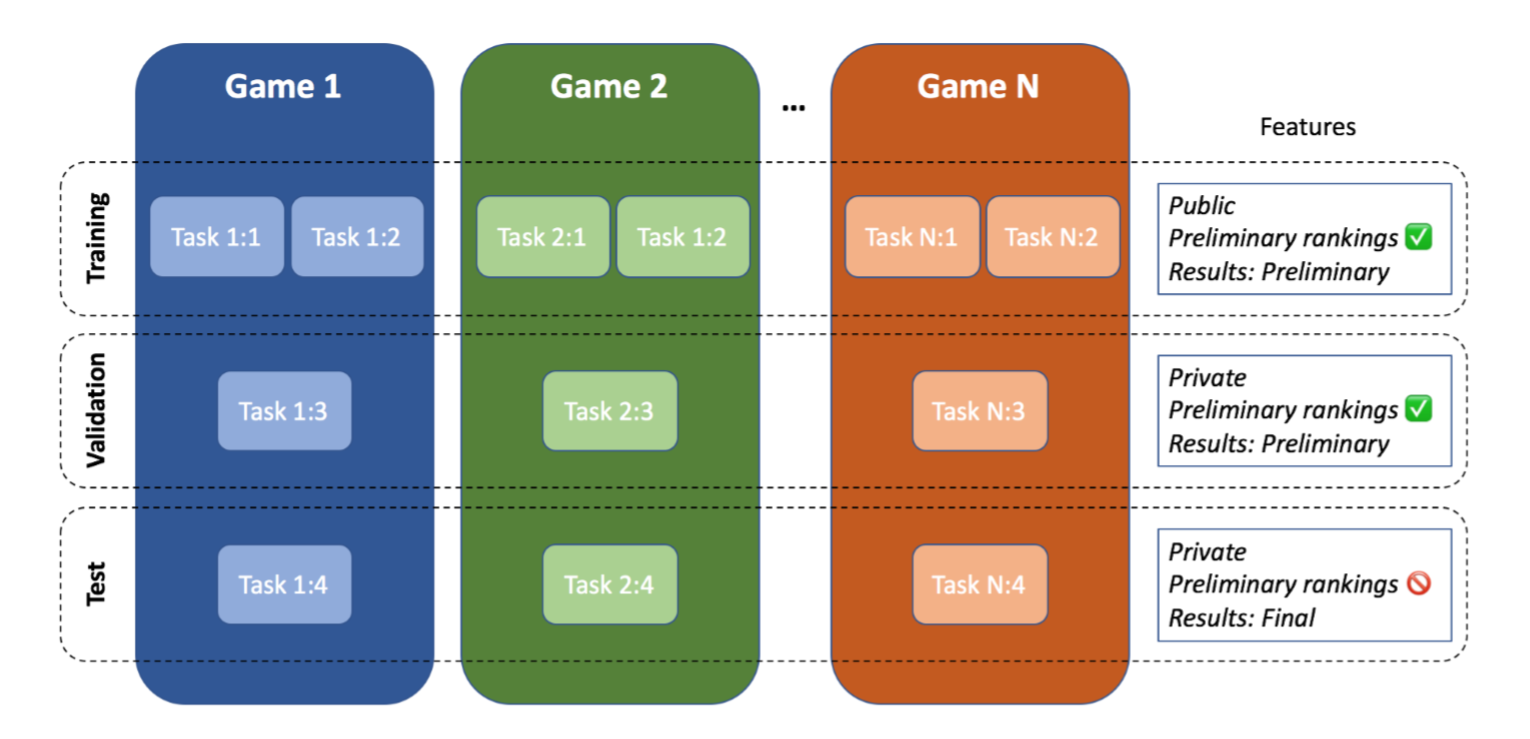

Figure 1: Sketch of how games and tasks are organized in the Challenge.


As can be seen, some tasks will be public and accessible to participants, while others are secret and will be used to evaluate the submitted entries at the end of the competition.

Tasks are distributed across sets:

Figure 2: On the left: Build Battle, where players need to recreate a structure (in this case, the structure is shown on the ground). On the right: Pig Chase, where players collaborate to corner the pig.


Competition

To participate in the Challenge, you first need to register as a user on crowdai.org and enter the Challenge. You can then start working on the Challenge by cloning its starter kit from GitHub. Your participation takes place through crowdAI's self-hosted GitLab instance, where you create a “private” repository with your initial code (or simply code copied from the starter kit). To make a submission, create a new git tag and push it to your repository. The crowdAI bot detects the pushed tag, automatically clones your repository into a Docker image and runs an evaluation. After the evaluation, the leaderboard is updated. You can find more details about the actual submission process in the starter kit.
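The tag-and-push submission step can be scripted. The following minimal Python sketch wraps the standard `git tag -a` and `git push <remote> <tag>` commands. The tag name `submission-v0.1`, the remote name `origin` and the helper names are hypothetical. The starter kit may require a specific tag format, so check it before submitting.

```python
import subprocess


def submission_commands(tag: str, remote: str = "origin") -> list[list[str]]:
    """Build the git commands that create an annotated tag and push it.

    The tag name and remote are hypothetical; use whatever the starter kit prescribes.
    """
    return [
        ["git", "tag", "-a", tag, "-m", f"Submission {tag}"],
        ["git", "push", remote, tag],
    ]


def submit(tag: str, remote: str = "origin", dry_run: bool = True) -> list[list[str]]:
    """Run the tag-and-push commands, or only print them when dry_run is True."""
    commands = submission_commands(tag, remote)
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError if git fails (e.g. the tag already exists).
            subprocess.run(cmd, check=True)
    return commands
```

Calling `submit("submission-v0.1", dry_run=False)` from inside your repository would create and push the tag. Each pushed tag triggers a new evaluation, so every submission needs a fresh tag name.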

Your code (and the corresponding issues for every submission) will stay private during the Challenge. You are encouraged to add an open-source license of your choice. At the end of the Challenge, you will be given a time period within which you can decide to keep your code private rather than making it publicly available. Otherwise, your repository will be made public by default. If the participant has not already added a license to the repository, an MIT license will be added automatically before the repository is made public. Those who object to having their code made public will be excluded from the automated process of making all Challenge-specific repositories public.

We are not responsible for entries that we do not receive for any reason, or for entries that we receive but are not decipherable for any reason. We will automatically disqualify any incomplete or illegible entries.

Evaluation criteria

## Winner Selection

Qualifying Round

Round 1 – until October 21, 2018

- Warm-up round. Submitted agents need to work on a single task.

Round 2 – October 21, 2018 – December 31, 2018

- Submitted agents play against a fixed random agent as their opponent.

At the close of the Entry Period (on December 31, 2018), the Organizer will select 8-32 qualifying teams from eligible entries based upon the overall performance on the Competition tasks (i.e. game score) to invite them to the Final Tournament.

The decisions of the Organizer are final and binding. If we do not receive a sufficient number of entries meeting the entry requirements, we may, at our discretion, select fewer qualifying teams to the tournament.

Kick-off Tournament

The Kick-off Tournament is organized as a live competition at the MARLO workshop at AIIDE 2018. A few invited teams are split into groups, and the teams in each group play among themselves using the same format as the Final Tournament, described below.

Final Tournament

The Final Tournament is organized offline within a week after the December 31, 2018 deadline. The 8-32 invited teams are split into 8 groups. The teams in each group play among themselves (in what we call a “league”) to determine a ranking, and the top 2 teams in each group progress to the next round. Each league (P players in a group) is played across the same N games, with T repetitions per game. Each game has its own leaderboard of agents ranked by the quality of the players. These leaderboards award ranking points to the entries following a Formula 1-like scheme: 25 points for the 1st-ranked entry, 18 for the 2nd, and 15, 12, 10, 8, 6, 4, 2 and 1 for the 3rd to 10th positions respectively. No points are awarded for 11th position or below. The winner of the league is determined by adding up all the ranking points obtained in the different games of the challenge.
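The league scoring described above can be sketched in Python. This minimal sketch makes two assumptions. First, each game's leaderboard ranks teams by their mean score over the T repetitions; the rules only say agents are “ranked by the quality of the players”. Second, ties are broken by order of appearance, since no tie-breaker is specified. All function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Points for positions 1st..10th, as listed in the Final Tournament rules.
F1_POINTS = [25, 18, 15, 12, 10, 8, 6, 4, 2, 1]


def points_for_position(position: int) -> int:
    """Ranking points for a 1-based position; 11th and below earn nothing."""
    if 1 <= position <= len(F1_POINTS):
        return F1_POINTS[position - 1]
    return 0


def rank_game(scores: dict[str, list[float]]) -> list[str]:
    """ASSUMPTION: rank teams in one game by mean score over its T repetitions."""
    return sorted(scores, key=lambda team: mean(scores[team]), reverse=True)


def league_standings(games: dict[str, dict[str, list[float]]]) -> list[tuple[str, int]]:
    """Sum ranking points across all N games of a league, best team first."""
    totals: dict[str, int] = defaultdict(int)
    for scores in games.values():
        for position, team in enumerate(rank_game(scores), start=1):
            totals[team] += points_for_position(position)
    # sorted() is stable, so tied teams keep the order in which they were first seen;
    # the rules do not specify a tie-breaker.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def advancing_teams(games: dict[str, dict[str, list[float]]], k: int = 2) -> list[str]:
    """The top k teams of a league progress to the next round."""
    return [team for team, _ in league_standings(games)[:k]]
```

Consider a league with two games. In the first, team A averages 5, B averages 4 and C averages 1.5. In the second, B averages 6, C averages 5 and A averages 0.5. The point totals are then B 43, A 40 and C 33, so B and A would advance.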

Figure 3: MARLO Final Tournament


If any team member is a potential winner and is 18 years of age or older, but is considered a minor in their place of legal residence, we may require that person’s parent or legal guardian to sign all required forms on their behalf. If that person does not complete the required forms as instructed and/or return the required forms within the time period listed on the winner notification message, we may disqualify the relevant team and select a runner-up.

If your team is confirmed as a winner of the Challenge:

- Your team may not designate another party as the winner. If your team is unable or unwilling to accept its prize, we may award it to a runner-up; and

- Team members will be solely responsible for any taxes that may be payable in connection with the award of any prize.

Resources

Please visit the starter-kit page first for comprehensive instructions on getting started with this competition.

- Starter-kit: https://github.com/crowdAI/marLo

The original Project Malmo platform is available on GitHub.

- GitHub: https://github.com/Microsoft/malmo

- Project Malmo website: https://www.microsoft.com/en-us/research/project/project-malmo/

Follow Project Malmo on Twitter for the latest news:

- Twitter: @Project_Malmo

Contact Us

- Gitter channel: https://gitter.im/Microsoft/malmo

- Discussion forum: https://www.crowdai.org/challenges/marlo-2018/topics

We strongly encourage you to use the public channels mentioned above for communications between the participants and the organizers. In extreme cases, if there are any queries or comments that you would like to make using a private communication channel, then you can send us an email at:

Prizes

MARLO Travel Grant Prize

The top 7 teams (3 Progress Awards at the Kick-off Tournament and 4 Awards at the Final Tournament) will each be awarded a MARLO Travel Grant with a maximum value of $2,500 USD for team members to attend a relevant conference and publish their competition results. The conference will be held within a year after the Final Tournament, and will be chosen later by mutual agreement between the Organizer and the winning team. Additionally, the 1st-place team will be awarded a second MARLO Travel Grant with a maximum value of $2,500 USD to attend the Applied Machine Learning Days 2019. A team may win both a Progress Award at the Kick-off Tournament and an Award at the Final Tournament, as long as the Organizer identifies improvements in that team's submission. The Organizer will reimburse the following reasonably and necessarily incurred travel expenses of team members attending the conference: (1) economy class round-trip airfare from your nearest airport to the conference city; and (2) accommodation for up to 3 days. The Organizer will not cover meals during your attendance at the conference.

Microsoft Azure Sponsorship Prize

Three teams will each win a Microsoft Azure Sponsorship at Final Tournament with a maximum value of $10,000 USD for the 1st place, $5,000 USD for the 2nd place and $3,000 USD for the 3rd place. The Microsoft Azure Sponsorship will be awarded to the team lead nominated by the relevant team. Team leads will be solely responsible for the allocation of the grant between the relevant team members. The award of a Microsoft Azure Sponsorship will be subject to each team member agreeing to comply with such terms and conditions of use or other requirements that Microsoft may impose.

Azure for Students

Azure for Students gets you started with $100 in Azure credits to be used within the first 12 months, plus select free services (subject to change), without requiring a credit card at sign-up. It is not required for the Challenge, but we recommend taking a look at the portal site if you need additional computing resources: https://azure.microsoft.com/en-us/free/students/.

Winner’s List and Sponsor

We will notify the winning teams by November 17, 2018. We will also post a leaderboard detailing the top 32 team entries online on crowdAI and under Microsoft Research website. Please note that we will only post team names and we will not post the names of any individual team members. This list will remain posted for a period of at least 12 calendar months.

If your team’s entry is in a public repository we may also post a link to that repository. Please check your GitLab and GitHub settings to ensure that this will not result in individual team members becoming identifiable unless this is intended by the individual(s) in question.

This promotion is sponsored by Microsoft Corporation, One Microsoft Way, Redmond, WA 98052-6399, USA.

Timeline

- July 27th, 2018: Competition Open

- October 21st, 2018: Qualifying Round 1 submission deadline

- November 14th, 2018: Kick-off Tournament

- December 31st, 2018: Qualifying Round 2 submission deadline

- Early January 2019: Final Tournament

Organizing Team

The organizing team comes from multiple groups — Queen Mary University of London, École Polytechnique Fédérale de Lausanne, and Microsoft Research.

The organizing team consists of:

- Diego Perez-Liebana (Queen Mary University of London)

- Raluca D. Gaina (Queen Mary University of London)

- Daniel Ionita (Queen Mary University of London)

- Sharada Prasanna Mohanty (École Polytechnique Fédérale de Lausanne)

- Sam Devlin (Microsoft Research)

- Andre Kramer (Microsoft Research)

- Sean Kuno (Microsoft Research)

- Katja Hofmann (Microsoft Research)

Sponsors

- Microsoft


Leaderboard

| Rank | Score |
|---|---|
| 01 | -8.300 |
| 02 | -23.600 |
| 03 | -23.640 |
| 04 | -24.700 |
| 05 | -25.400 |
