NeurIPS 2019 : Disentanglement Challenge

Disentanglement: from simulation to real-world

Download the starter kit to get started immediately. Following the instructions there will download all the necessary datasets and code. The starter kit itself can already be used to make a valid submission, hence providing an easy entry-point.

To stay up to date with further announcements, you may also follow us on Twitter.


Bringing Disentanglement to the Real-World!

The success of machine learning algorithms depends heavily on the representation of the data. It is widely believed that good representations are distributed, invariant and disentangled [1]. This challenge focuses on disentangled representations where explanatory factors of the data tend to change independently of each other. Independent codes have been proven to be useful in different areas of machine learning such as causal inference [2], reinforcement learning [3], efficient coding [4], and neuroscience [5].

Since real-world data is notoriously costly to collect, many recent state-of-the-art disentanglement models have relied heavily on synthetic toy datasets [6, 7].

While synthetic datasets are cheap and easy to generate, and their independent generative factors can be controlled, the performance of state-of-the-art unsupervised disentanglement learning on real-world data is unknown.

Given the growing importance of the field and the potential societal impact in the medical domain or fair decision making [e.g. 8, 9, 10], it is high time to bring disentanglement to the real world:

Stage 1: Sim-to-real transfer learning - design representation learning algorithms on simulated data and transfer them to the real world.

Stage 2: Advancing disentangled representation learning to complicated physical objects.

More details for each stage are provided below.

Summary

Contestants participate by implementing a trainable disentanglement algorithm and submitting it to the evaluation server. Participants submit their code to AIcrowd, where the AIcrowd evaluators run it to compute its score (as described below).

The submitted method will access a dataset on the evaluation server. The challenge objective is to let the method automatically determine the dataset’s independent factors of variation in an unsupervised fashion.

In order to prevent overfitting, the dataset used to compute the final scores is kept completely hidden from participants until the respective challenge stage is terminated. Participants are encouraged to find robust disentanglement methods that work well without the need for manual adjustments.

Additionally, participants are required to submit a three-page report on their method to OpenReview. Detailed requirements for the report are given below.

The final score used to rank the participants and determine winners is a mixture of several disentanglement metrics. Details about the evaluation procedure can be found below.

The library disentanglement_lib [Link] provides several datasets, disentanglement methods and evaluation metrics, which gives participants an easy way to get started.

A public leaderboard shows the ranking of the methods submitted so far (without taking into account the quality of the report). The final ranking can differ substantially from the one on the public leaderboard because a different dataset will be used to determine the score.

Stages

The challenge is split into two stages. In each stage there are three different datasets [6] (all of which include labels):

- (1) a dataset which is publicly available,

- (2) a dataset which is used for the public leaderboard,

- (3) a dataset which is used for the private leaderboard.

The Github repository [Link] contains the necessary information to use dataset (1).

The participants may use dataset (1) to develop their methods. Each method which is submitted will be retrained and evaluated on dataset (2) as well as dataset (3).

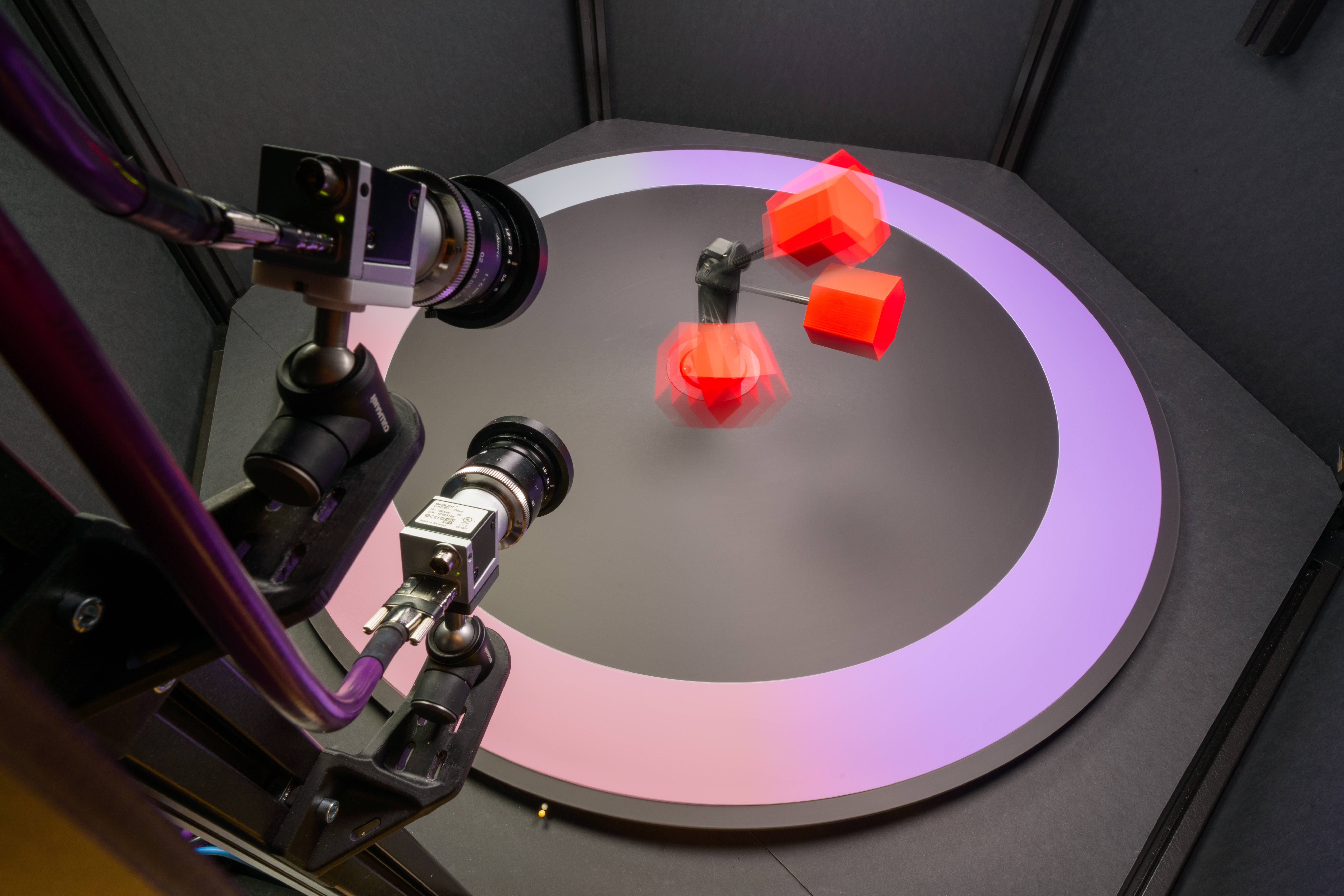

In the first stage, the goal is to transfer from simulated to real images. Dataset (1) will correspond to simplistic simulated images, (2) to more realistically simulated images and (3) will consist of real images of the same setup.

Simple simulated data

In the second stage, the goal is to transfer to unseen objects. (1) will consist of all datasets used in the first stage, (2) will consist of realistically simulated images of objects which are not included in (1) and (3) will consist of real images of those unseen objects.

Note: while the publicly released dataset is ordered according to the factors of variation, the private datasets will be randomly permuted prior to training. This means that at training time the images will be effectively unlabeled.
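The effect of this permutation can be illustrated with a toy sketch. The factor sizes and function names below are hypothetical and chosen only for illustration; they are not the challenge's actual factors or code. In an ordered dataset, the position of an image determines its factor values, whereas after a random permutation the position carries no such information.

```python
import itertools
import random

# Hypothetical factor sizes, for illustration only (not the challenge's real factors).
FACTOR_SIZES = (2, 3, 4)


def ordered_factor_labels(factor_sizes):
    """Enumerate all factor combinations in lexicographic order, as in an ordered dataset."""
    return list(itertools.product(*(range(n) for n in factor_sizes)))


def permute_dataset(items, seed):
    """Return a randomly permuted copy; an item's position no longer reveals its factors."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    return shuffled


labels = ordered_factor_labels(FACTOR_SIZES)

# In the ordered dataset, index i maps directly to the factor values:
# index 5 = 0 * 12 + 1 * 4 + 1, i.e. factors (0, 1, 1).
assert labels[5] == (0, 1, 1)

# After permutation, the same items are present but in an arbitrary order.
shuffled = permute_dataset(labels, seed=0)
```

The permuted list contains exactly the same items as the ordered one, so no data is lost; only the implicit labeling through the ordering is removed.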

Timeline

- June 28th, 2019: Stage 1 starts

- August 6th, 2019, 11:59pm AoE: Submission deadline for methods, Stage 1

- ~~August 16th, 2019, 11:59pm AoE~~ September 23rd, 2019, 11:59am GMT: Submission deadline for reports, Stage 1

- September 2nd, 2019: Stage 2 starts

- October 1st, 2019, 11:59pm AoE: Submission deadline for methods, Stage 2

- ~~October 23rd, 2019, 11:59pm GMT~~ November 13th, 2019, 11:59pm AoE: Submission deadline for reports, Stage 2

Writing a Report

Contestants have to provide a three-page document (plus up to 10 additional pages of references and supplementary material) giving all the necessary details of their approach, submitted to the challenge venue on OpenReview. This guarantees the reproducibility of the results and the transparency needed to advance the state of the art for learning disentangled representations. The report has to be submitted according to the deadlines provided above.

Participants are required to use a LaTeX template we provide to prepare the reports; changing the formatting is not allowed. The template will be released before Stage 1 ends.

The report has a maximum length of three pages with an appendix of ten pages. However, reviewers are not required to consider the appendix, and all essential information must be contained in the main body of the report. Submissions must fulfill some essential requirements in terms of clarity and precision: the information contained in the report should be sufficient for an experienced member of the community to reimplement the proposed method (including hyperparameter optimization), and reviewers may check coherence between the report and the submitted code.

Reports which do not satisfy those requirements will be disqualified along with the corresponding methods.

We encourage all participants to actively engage in discussions on OpenReview. While not a formal challenge requirement, every participant who submits a report should commit to review or comment on at least three submissions which are not their own.

Evaluation Criteria

Methods are scored as follows:

The model is evaluated on the full dataset using each of the following metrics (as implemented in disentanglement_lib [Link]):

- IRS

- DCI

- Factor-VAE

- MIG

- SAP-Score

The final score for a method is determined as follows:

- All participants’ methods are ranked independently according to each of the five evaluation metrics

- Those 5 ranks are summed up for each method to give the method’s final score (lower is better)
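The rank-sum computation described above can be sketched as follows. This is a minimal illustration, not the organizers' evaluation code: the function name, the metric keys, and the example values are hypothetical. It assumes that a higher value is better for every metric and that ties are broken by input order, neither of which is specified here. Since prizes go to the lowest final score, the method with the smallest rank sum comes first.

```python
from typing import Dict

# Metric keys are hypothetical labels for the five metrics listed above.
METRICS = ["IRS", "DCI", "FactorVAE", "MIG", "SAP"]


def rank_sum_scores(results: Dict[str, Dict[str, float]]) -> Dict[str, int]:
    """Sum each method's per-metric rank, where rank 1 is the highest metric value.

    Assumption: higher metric values are better; ties are broken by input order.
    """
    totals = {name: 0 for name in results}
    for metric in METRICS:
        # Order methods from best to worst on this metric.
        ordered = sorted(results, key=lambda name: results[name][metric], reverse=True)
        for rank, name in enumerate(ordered, start=1):
            totals[name] += rank
    return totals


# Made-up metric values for three hypothetical methods.
example = {
    "method_a": {"IRS": 0.6, "DCI": 0.3, "FactorVAE": 0.7, "MIG": 0.2, "SAP": 0.10},
    "method_b": {"IRS": 0.5, "DCI": 0.4, "FactorVAE": 0.8, "MIG": 0.3, "SAP": 0.20},
    "method_c": {"IRS": 0.4, "DCI": 0.2, "FactorVAE": 0.6, "MIG": 0.1, "SAP": 0.05},
}

scores = rank_sum_scores(example)
# The lowest rank sum is the best final score.
best = min(scores, key=scores.get)
```

In this made-up example, `method_b` ranks first on four metrics and second on one, giving a rank sum of 6, which is the lowest of the three.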

Teams whose reports do not satisfy basic requirements of clarity and thoroughness (as detailed above) will be disqualified.

Furthermore, the goal of this challenge is to advance the state-of-the-art in representation learning, hence we reserve the right to disqualify methods which are overly tailored to the type of data used in this challenge. By overly tailored methods we mean methods which will by design not work on slightly different problems, e.g. a slightly different mechanical setup for moving the objects.

The organizers may decide to change the computation of the scores for Stage 2. If so, this will be announced at the end of Stage 1.

Prizes are awarded to the participants in the order of their methods’ final scores (the lowest score wins), excluding participants who are not eligible for prizes. Prizes are awarded independently in each of the two challenge stages.

Prizes

In each of the two stages, the following prizes are awarded to the participants with the best scores.

Stage 1

- Winner: 3,000 EUR

- Runner-up: 1,500 EUR

- Third-place: 1,000 EUR

- Best Paper: 3,000 EUR

- Runner-up best paper: 1,500 EUR

Stage 2

- Winner: 3,000 EUR

- Runner-up: 1,500 EUR

- Third-place: 1,000 EUR

- Best Paper: 3,000 EUR

- Runner-up best paper: 1,500 EUR

The reports of the best-performing teams will be published in JMLR proceedings.

The winners are determined independently in each of the stages. Winners of Stage 1 are not excluded from winning prizes in Stage 2.

We award two Brilliancy prizes in each stage to the papers that the jury determines to be the most innovative and best described.

Cash prizes will be paid out to an account specified by the organizer of each team. It is the responsibility of the team organizer to distribute the prize money according to their team-internal agreements.

Eligibility

The organizers will not be able to transfer the prize money to accounts in any of the following countries or regions. (Please note that residents of these countries or regions are still allowed to participate in the challenge.) The same applies to candidates who are listed on the EU sanctions list.

- The Crimea region of Ukraine

- Cuba

- Iran

- North Korea

- Sudan

- Syria

- Quebec, Canada

- Brazil

- Italy

Members/employees of the following institutions may participate in the challenge, but are excluded from winning any prizes:

- Max Planck Institute for Intelligent Systems

- ETH Zurich (Computer Science Department)

- Google AI Zurich

- AIcrowd

Reviewers of the paper “On the Role of Inductive Bias From Simulation and the Transfer to the Real World: a New Disentanglement Dataset” may participate in the challenge, but are excluded from winning any prizes.

Further Rules

- Participants may participate alone or in a team of up to 6 people in one or both stages of the challenge.

- Individuals are not allowed to enter the challenge using multiple accounts. Each individual can only be part of one team.

- To be eligible to win prizes, participants agree to release their code under an OSI approved license.

- The organizers reserve the right to change the rules if doing so is absolutely necessary to resolve unforeseen problems.

- The organizers reserve the right to disqualify participants who violate the rules or engage in scientific misconduct.

- The organizers reserve the right to disqualify any participant who engages in unscientific behavior or who harms the successful organization of the challenge in any way.

Organizing Team

This challenge is jointly organized by members from the Max Planck Institute for Intelligent Systems, ETH Zürich, Mila, and Google Brain.

The Team consists of:

- Stefan Bauer (MPI)

- Manuel Wüthrich (MPI)

- Francesco Locatello (MPI, ETH)

- Alexander Neitz (MPI)

- Arash Mehrjou (MPI)

- Djordje Miladinovic (ETH)

- Waleed Gondal (MPI)

- Olivier Bachem (Google Research, Brain Team)

- Sharada Mohanty (AIcrowd)

- Martin Breidt (MPI)

- Nasim Rahaman (Mila)

- Valentin Volchkov (MPI)

- Joel Bessekon Akpo (MPI)

- Yoshua Bengio (Mila)

- Karin Bierig (MPI)

- Bernhard Schölkopf (MPI)

Sponsors

- Max Planck Institute for Intelligent Systems

- ETH Zürich

- Montreal Institute for Learning Algorithms

- Google AI

- Amazon

References

[1] Bengio, Y., Courville, A. and Vincent, P. Representation learning: A review and new perspectives. IEEE transactions on pattern analysis and machine intelligence, 35(8), pp.1798-1828. 2013.

[2] Suter, R., Miladinovic, D., Schölkopf, B., Bauer, S. Robustly Disentangled Causal Mechanisms: Validating Deep Representations for Interventional Robustness. International Conference on Machine Learning. 2019.

[3] Lesort, T., Díaz-Rodríguez, N., Goudou, J. F., Filliat, D. Disentangled State representation learning for control: An overview. Neural Networks. 2018.

[4] Barlow, H. B. Possible principles underlying the transformation of sensory messages. Sensory communication 1. 217-234. 1961.

[5] Olshausen, B. A., Field, D. J. Sparse coding of sensory inputs. Current opinion in neurobiology 14.4. 481-487. 2004.

[6] Gondal, M. W., Wüthrich, M., Miladinović, Đ., Locatello, F., Breidt, M., Volchkov, V., Akpo, J., Bachem, O., Schölkopf, B., Bauer, S. On the Transfer of Inductive Bias from Simulation to the Real World: a New Disentanglement Dataset. arXiv preprint arXiv:1906.03292. 2019.

[7] Locatello, F., Bauer, S., Lucic, M., Gelly, S., Schölkopf, B., Bachem, O. Challenging Common Assumptions in the Unsupervised Learning of Disentangled Representations. International Conference on Machine Learning. 2019.

[8] Locatello, F., Abbati, G., Rainforth, T., Bauer, S., Schölkopf, B. and Bachem, O. On the Fairness of Disentangled Representations. arXiv preprint arXiv:1905.13662. 2019.

[9] Creager, E., Madras, D., Jacobsen, J.H., Weis, M.A., Swersky, K., Pitassi, T. and Zemel, R. Flexibly Fair Representation Learning by Disentanglement. International Conference on Machine Learning. 2019.

[10] Chartsias, A., Joyce, T., Papanastasiou, G., Semple, S., Williams, M., Newby, D., Dharmakumar, R. and Tsaftaris, S.A. Factorised spatial representation learning: application in semi-supervised myocardial segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 490–498. Springer. 2018.

Contact Us

Use one of the public channels:

- Gitter Channel : AIcrowd-HQ/disentanglement_challenge

- Discussion Forum

We strongly encourage you to use the public channels mentioned above for communications between the participants and the organizers. In extreme cases, if there are any queries or comments that you would like to make using a private communication channel, then you can send us an email at :
