Location

London, UK., GB

Challenges Entered

Multi-Agent Reinforcement Learning on Trains

Latest submissions

| failed | 114228 |
| failed | 114149 |
| failed | 114146 |

Find all the aircraft!

Latest submissions

Disentanglement: from simulation to real-world

Latest submissions

Sample-efficient reinforcement learning in Minecraft

Latest submissions

Multi Agent Reinforcement Learning on Trains.

Latest submissions

Multi-Agent Reinforcement Learning on Trains

Latest submissions

| failed | 117943 |
| failed | 117814 |
| failed | 117806 |

| Participant | Rating |
|---|---|
| shivam | 136 |
| nilabha | 122 |
| vrv | 0 |

| Participant | Rating |
|---|---|
| nilabha | 122 |

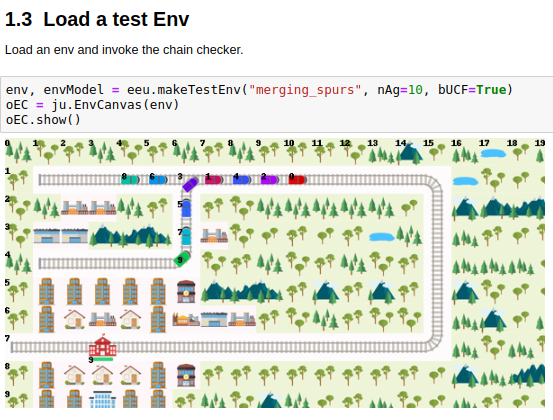

inside-job FlatlandView

Flatland

How can I create a rail network, which is just a simple circle?

Almost 5 years ago

Hi, very sorry for the six-week delay(!); someone at AICrowd very kindly just brought your note to my attention.

Yes, it appears the author of the jpy_canvas extension removed it from his public GitHub some months ago; I think it had stopped working with the latest Jupyter some time earlier. It is probably just about possible to get things working by pinning old versions, but that’s not the way any of us want to go.

At some point I expect we will refresh the editor to work with ipycanvas (on GitHub), the newer and better-maintained canvas extension, but there are no immediate plans for this.

We’ve done a couple of bits of related work in the last 6-9 months:

- converting some real layout data into flatland environments;

- using manually coded coordinate strokes (like mouse-strokes in the editor) to create specific environments.

Both used some of the old editor code to handle the logic of joining rails etc. (The older hand-coded test environments literally had to spell out explicit decimal / binary numbers representing the transitions.)
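To illustrate what “explicit decimal / binary numbers representing the transitions” looks like, here is a minimal sketch that decodes one such number. It assumes the convention that a cell is a 16-bit value read as four 4-bit groups, one per entry heading in the order N, E, S, W, with each bit marking a permitted exit heading. The helper name decode_transitions is hypothetical and not part of flatland.

```python
# Assumed convention: headings are ordered N, E, S, W, and a cell's
# transitions are a 16-bit integer read as four 4-bit groups (one group per
# heading on entry), each bit marking a permitted heading on exit.
DIRECTIONS = "NESW"


def decode_transitions(cell_value: int) -> dict:
    """Map each entry heading to the list of exit headings it allows."""
    bits = format(cell_value, "016b")
    allowed = {}
    for i, heading in enumerate(DIRECTIONS):
        group = bits[4 * i: 4 * i + 4]
        exits = [DIRECTIONS[j] for j, bit in enumerate(group) if bit == "1"]
        if exits:
            allowed[heading] = exits
    return allowed


# A straight north-south rail written as a binary literal.
straight_ns = int("1000000000100000", 2)
print(straight_ns, decode_transitions(straight_ns))
```

Writing every cell this way by hand is what the stroke-based helpers avoid.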

I’ll try to put some information together, maybe in a branch. Please ping us again here or in an issue if you’re still interested in this.

Many thanks!

Build problem with the current `environment.yml` file

About 5 years ago

Related question: can I upgrade to Python 3.7 by simply specifying, say, 3.7.9?

How can I create a rail network, which is just a simple circle?

Over 5 years ago

Hi @fhohnstein - there are a couple of notebooks in the flatland repo which might be of interest.

Scene-editor.ipynb should allow you to draw some rails by dragging the mouse. There are some buttons to load & save etc. It’s a bit broken when it comes to adding agents and targets though.

test-collision.ipynb creates a simple environment on-the-fly using env_edit_utils.py which is related to the editor. It applies simulated mouse-strokes, specified by (row,column) co-ordinates, similar to how the editor works. The one used in the test case is called “concentric_loops” and is defined in ddEnvSpecs like this:

    # Concentric Loops
    "concentric_loops": {
        "llrcPaths": [
            [(1, 1), (1, 5), (8, 5), (8, 1), (1, 1), (1, 3)],
            [(1, 3), (1, 10), (8, 10), (8, 3)]
        ],
        "lrcStarts": [(1, 3)],
        "lrcTargs": [(2, 1)],
        "liDirs": [1]
    },

When defining the “strokes” (llrcPaths means “list of lists of (row, col) paths”, to me at least) you need to make the ends of the strokes overlap a bit, and then it can work out how to join the rails. (This applies if you’re doing it by hand in the editor too.)
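As a rough way to check the overlap before building an environment, the sketch below expands each stroke into the cells it covers and reports the cells two strokes share. This is not the editor’s joining logic, only an illustration; expand_stroke and shared_cells are hypothetical helpers, and it assumes consecutive waypoints always share a row or a column.

```python
def expand_stroke(waypoints):
    """Expand (row, col) waypoints into every cell visited along the stroke.

    Assumes each segment is horizontal or vertical.
    """
    cells = [waypoints[0]]
    for (r0, c0), (r1, c1) in zip(waypoints, waypoints[1:]):
        if r0 != r1 and c0 != c1:
            raise ValueError(f"diagonal segment {(r0, c0)} -> {(r1, c1)}")
        # Step direction along the row and column axes: -1, 0 or +1.
        dr = (r1 > r0) - (r1 < r0)
        dc = (c1 > c0) - (c1 < c0)
        r, c = r0, c0
        while (r, c) != (r1, c1):
            r, c = r + dr, c + dc
            cells.append((r, c))
    return cells


def shared_cells(stroke_a, stroke_b):
    """Cells covered by both strokes; an empty set means they cannot join."""
    return set(expand_stroke(stroke_a)) & set(expand_stroke(stroke_b))


# The two strokes from the "concentric_loops" spec.
first = [(1, 1), (1, 5), (8, 5), (8, 1), (1, 1), (1, 3)]
second = [(1, 3), (1, 10), (8, 10), (8, 3)]
print(sorted(shared_cells(first, second)))
```

For these two strokes the shared cells lie along rows 1 and 8, which is where the second loop branches off the first.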

Hope that helps!

🚉 Questions about the Flatland Environment

Over 5 years ago

@beibei remember that the default rendering displays the agents one step behind. This is because it needs to know both the current and the next cell to get the angle of the agent correct. (Or maybe you’ve switched to a different “AgentRenderVariant”, I don’t know.)
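The reason two cells are needed can be shown with a small sketch: a heading only becomes known once you have the step between two adjacent cells. The function heading_between is hypothetical, and the numbering 0 = north, 1 = east, 2 = south, 3 = west (with rows increasing downwards) is an assumption here rather than something taken from the renderer’s code.

```python
# Assumed heading numbering: 0 = north, 1 = east, 2 = south, 3 = west,
# with row indices increasing downwards.
HEADING_BY_DELTA = {(-1, 0): 0, (0, 1): 1, (1, 0): 2, (0, -1): 3}


def heading_between(current, nxt):
    """Return the heading implied by moving from `current` to `nxt`."""
    delta = (nxt[0] - current[0], nxt[1] - current[1])
    if delta not in HEADING_BY_DELTA:
        raise ValueError(f"{current} and {nxt} are not adjacent cells")
    return HEADING_BY_DELTA[delta]


print(heading_between((11, 23), (11, 24)))  # a step to the next column
print(heading_between((10, 23), (11, 23)))  # a step to the next row
```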

With unordered close following (UCF) the index of the agents shouldn’t matter unless two agents are both trying to enter the same cell, in which case the lower index agent wins.

So ignoring the other agents (27?) if:

- 6 is trying to move (11,23)->(11,24) and

- 2 is trying to move (10,23)->(11,23)

…then that looks like a UCF situation; it should work out that 6 is vacating the cell which 2 is entering, and I think it should work.

OTOH it’s possible that 2’s action to turn left came too late. I think 2 should say “MOVE_LEFT” (action 1) when it’s in (10,24), just before it moves into (10,23).

Maybe you’ve resolved this one already.

🚃🚃 Train Close Following

Over 5 years ago

Hi, there’s an option show_debug when you create the RenderTool; set it to True. The row/col numbering is controlled by show_rowcols, but that option is passed to RenderTool.render_env rather than to the constructor.

Sorry for the inconsistency…

🚃🚃 Train Close Following

Over 5 years ago

Hey @adrian_egli, sorry, I’ve only just seen this.

I made a simpler way (I think) to create test cases using some logic from the editor, allowing you to paint “strokes” (like mouse or brush strokes) on the env. It isn’t perfect but I think it’s easier than assembling rail junctions by number and rotation; also in a way it’s easier than the editor because (1) no extra files involved and (2) it was always a bit tricky to add agents using the editor.

It’s in env_edit_utils.py (this example is not yet committed):

    # two loops
    "loop_with_loops": {
        "llrcPaths": [
            # big outer loop Row 1, 8; Col 1, 15
            [(1, 1), (1, 15), (8, 15), (8, 1), (1, 1), (1, 3)],
            # alternative 1
            [(1, 3), (1, 5), (3, 5), (3, 10), (1, 10), (1, 12)],
            # alternative 2
            [(8, 3), (8, 5), (6, 5), (6, 10), (8, 10), (8, 12)],
        ],
        # list of row,col of agent start cells
        "lrcStarts": [(1, 3), (1, 13)],
        # list of row,col of targets
        "lrcTargs": [(2, 1), (7, 15)],
        # list of initial directions
        "liDirs": [1, 3],
    }

(you need to remember to overlap the strokes a bit, otherwise they will not join properly)

🚃🚃 Train Close Following

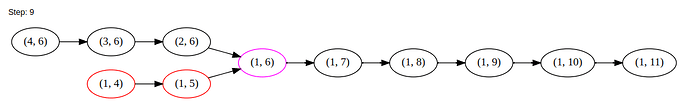

Over 5 years ago

Hey Adrian - there is an example in the notebook Agent-Close-Following.ipynb.

It sets up a little test env, runs a few steps / actions, and renders the chains / trains of adjacent agents using GraphViz.

At step 9 you can see that the agents in cells (1,4) and (1,5) are blocked by the others in front and by the lower-index agent in (2,6). (I couldn’t find an easy way to make GraphViz render the agent numbers in the cells, as well as the (row, col) node identifiers.)

For the “close-following” (aka unordered close following or UCF) we set up a digraph for every step, and the edges are the moves the agents want to make. This is of course different to creating a (static) graph or digraph for the rails.
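A minimal sketch of the idea, under stated assumptions: each wanted move is an edge from the agent’s current cell to its desired cell, the lowest-index agent wins a contested cell, and a move into an occupied cell succeeds only if the occupant itself moves away. The function resolve_moves and its data layout are hypothetical; this is not flatland’s implementation, and it does not promote a losing contender if the winner later turns out to be blocked.

```python
def resolve_moves(positions, wanted):
    """Return the set of agents allowed to move this step.

    positions: agent index -> current (row, col) cell
    wanted:    agent index -> desired (row, col) cell (absent = stay put)
    """
    # Edges of the per-step digraph: current cell -> desired cell.
    moving = {a for a, target in wanted.items() if target != positions[a]}

    # Rule 1: when several agents want the same cell, the lowest index wins.
    winner = {}
    for agent in sorted(moving):
        winner.setdefault(wanted[agent], agent)
    moving = {a for a in moving if winner[wanted[a]] == a}

    occupant = {cell: a for a, cell in positions.items()}

    # Rule 2: entering an occupied cell works only if its occupant vacates it
    # (and is not trying to swap places head-on). Repeat until stable, since
    # blocking one agent can block the agents queued behind it.
    changed = True
    while changed:
        changed = False
        for agent in sorted(moving):
            blocker = occupant.get(wanted[agent])
            if blocker is None:
                continue
            swapping = wanted.get(blocker) == positions[agent]
            if blocker not in moving or swapping:
                moving.discard(agent)
                changed = True
    return moving


# Agent 1 follows directly behind agent 0; both can advance together.
positions = {0: (1, 5), 1: (1, 4)}
wanted = {0: (1, 6), 1: (1, 5)}
print(sorted(resolve_moves(positions, wanted)))
```

If agent 0 were stationary instead, agent 1 would be blocked, and so would any agent queued behind agent 1.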

Apologies to anyone struggling to install GraphViz btw. If it proves to be a problem we will improve our instructions. GraphViz is not necessary for the operation of the env but it was useful for making the graphical test cases.

Editor.py missing package jpy_canvas

Over 4 years ago

Hi @oussama_a - in fact the missing repo has recently been kindly put back on GitHub for us by @who8mylunch (Pierre). I believe the flatland conda install instructions should work, and following the jupyter-canvas-widget installation instructions worked for me in a virtualenv using the latest jupyter packages. (It creates a package called jpy_canvas, I think; I’m away from a screen right now.)

The editor needs a bit of tidying but I think you should be able to draw a track and save it. I’ll keep an eye out if you would like to report any problems. Thanks!