Location

BR

Challenges Entered

Measure sample efficiency and generalization in reinforcement learning using procedurally generated environments

Latest submissions

| graded | 94644 |
| graded | 94643 |
| graded | 93847 |

Multi-Agent Reinforcement Learning on Trains

Latest submissions

A benchmark for image-based food recognition

Latest submissions

| graded | 32398 |
| graded | 32370 |
| graded | 32272 |

Sample-efficient reinforcement learning in Minecraft

Latest submissions

Robots that learn to interact with the environment autonomously

Latest submissions

Disentanglement: from simulation to real-world

Latest submissions

Sample-efficient reinforcement learning in Minecraft

Latest submissions

Multi Agent Reinforcement Learning on Trains.

Latest submissions

Project 2: Road extraction from satellite images

Latest submissions

NeurIPS 2020: Procgen Competition

Spot instances issues

Over 5 years ago

On a submission that I made, @jyotish mentioned that submissions were being trained on AWS spot instances.

This means that the instance can shut down anytime.

Checkpoints of the models are made periodically, and training is then restored.

The problem is that many important training variables and data are not stored in checkpoints (e.g. the data in a replay buffer). This leads to unstable training whose results vary depending on your luck in not being interrupted.

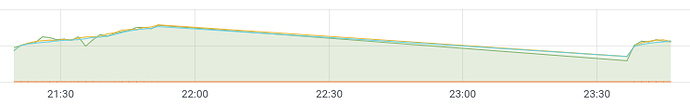

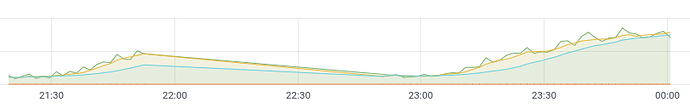

Here are a couple of plots of the mean training return for one of my submissions. The long stretched lines connect far-apart data points (the agent being interrupted and then restored).

What does the AIcrowd team recommend here? Storing the replay buffer and any other important variables whenever checkpointing?
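One way to read the question above is as a sketch like the following, which saves the replay buffer and a few extra variables alongside each model checkpoint. This is only an illustration under assumptions: `ReplayBuffer`, `save_training_state`, and `load_training_state` are hypothetical names, the buffer is a plain FIFO, and nothing here reflects what the AIcrowd evaluation setup actually does or recommends.

```python
import os
import pickle
import random
import tempfile
from collections import deque


class ReplayBuffer:
    """Minimal FIFO replay buffer (hypothetical stand-in for the agent's real buffer)."""

    def __init__(self, capacity):
        self.storage = deque(maxlen=capacity)

    def add(self, transition):
        self.storage.append(transition)

    def sample(self, batch_size):
        return random.sample(list(self.storage), batch_size)


def save_training_state(path, buffer, extra_state):
    """Write the buffer contents and any extra variables to `path`."""
    payload = {
        "buffer": list(buffer.storage),
        "capacity": buffer.storage.maxlen,
        "extra": extra_state,
    }
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file first, then rename, so an interruption
    # in the middle of a write cannot corrupt the last good state.
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as f:
        pickle.dump(payload, f)
    os.replace(tmp_path, path)


def load_training_state(path):
    """Rebuild the buffer and return it together with the extra variables."""
    with open(path, "rb") as f:
        payload = pickle.load(f)
    buffer = ReplayBuffer(payload["capacity"])
    buffer.storage.extend(payload["buffer"])
    return buffer, payload["extra"]
```

Calling `save_training_state` whenever the model checkpoint is written, and `load_training_state` on restore, would keep the buffer and counters in step with the weights. The cost is extra disk I/O per checkpoint, which grows with the buffer size.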

Onto the different tracks

Over 5 years ago

The competition is to have two tracks, sample efficiency and generalization, so how will submissions be scored? From what I understood, every round 2 submission will be evaluated on both tracks, and the top 10 submissions of each track will be selected for a more thorough evaluation.

Running the evaluation worker during evaluations is now optional

Over 5 years ago

Great! Although, is there any way to plot our own metrics in the Grafana dashboard (e.g. mean training return)?

Edit: Found it. There is an option in the dashboard to plot any metric your code outputs in each training iteration. Really useful.

Rllib custom env

Over 5 years ago

It probably will not. RLlib counts steps using the counter in each worker, which operates on the outermost wrapper of the environment. It is also pretty easy to exceed this number when implementing a custom trainer, so one must take care not to go over it.
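To make the point about the outermost wrapper concrete, here is a minimal sketch of a guard that a custom training loop could use to avoid going over a step limit. It does not use RLlib's API: `StepBudget` and `BudgetedEnv` are hypothetical names, and the environment is assumed to expose plain `reset()` and `step(action)` methods.

```python
class StepBudgetExceeded(RuntimeError):
    """Raised when a step is requested after the budget is used up."""


class StepBudget:
    """Counter that can be shared by several environments (hypothetical helper)."""

    def __init__(self, max_steps):
        self.max_steps = max_steps
        self.used = 0

    def consume(self):
        if self.used >= self.max_steps:
            raise StepBudgetExceeded(
                f"step budget of {self.max_steps} already used"
            )
        self.used += 1


class BudgetedEnv:
    """Outermost wrapper: charges one budget step per call to step().

    Because it sits outside every other wrapper, an inner frame-skip
    wrapper would still be charged only once per outer step.
    """

    def __init__(self, env, budget):
        self.env = env
        self.budget = budget

    def reset(self):
        return self.env.reset()

    def step(self, action):
        self.budget.consume()  # raises before the extra step is taken
        return self.env.step(action)
```

Sharing one `StepBudget` across all wrapped environments gives a single global count, at the price of failing loudly instead of stopping gracefully; a real trainer would more likely check `budget.used` before each rollout.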

Food Recognition Challenge

Instructions, EDA and baseline for Food Recognition Challenge

About 6 years ago

I haven’t submitted yet. I see that in the baseline you remove the .json extension from the output path. Is this necessary for the submission?

Instructions, EDA and baseline for Food Recognition Challenge

About 6 years ago

Thanks for the feedback, fixed my issue.

Instructions, EDA and baseline for Food Recognition Challenge

About 6 years ago

When trying to run the model for inference I get the error:

    Traceback (most recent call last):
      File "mmdetection/tools/test.py", line 284, in <module>
        main()
      File "mmdetection/tools/test.py", line 233, in main
        checkpoint = load_checkpoint(model, args.checkpoint, map_location='cpu')
      File "/opt/conda/lib/python3.6/site-packages/mmcv/runner/checkpoint.py", line 172, in load_checkpoint
        checkpoint = torch.load(filename, map_location=map_location)
      File "/opt/conda/lib/python3.6/site-packages/torch/serialization.py", line 387, in load
        return _load(f, map_location, pickle_module, **pickle_load_args)
      File "/opt/conda/lib/python3.6/site-packages/torch/serialization.py", line 564, in _load
        magic_number = pickle_module.load(f, **pickle_load_args)
    _pickle.UnpicklingError: invalid load key, 'v'.

The error is raised while loading the weights.

I get the same error with every other set of weights; only ‘epoch_22.pth’ loads.
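The message `invalid load key, 'v'` means the first byte of the file is the character `v`, so the file is not a pickled checkpoint. One common cause, which is only a guess here since the post does not say what the fix was, is that the file is a Git LFS pointer (a small text file beginning with `version https://git-lfs.github.com/spec/v1`) rather than the real weights. A minimal sketch of a check, with `looks_like_git_lfs_pointer` as a hypothetical helper name:

```python
# First line of a Git LFS pointer file, per the Git LFS pointer format.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def looks_like_git_lfs_pointer(path):
    """Return True if the file at `path` starts like a Git LFS pointer."""
    with open(path, "rb") as f:
        head = f.read(len(LFS_POINTER_PREFIX))
    return head == LFS_POINTER_PREFIX
```

If the check returns `True` for a `.pth` file, the actual weights have not been downloaded, and fetching them (for example with `git lfs pull`) would be the thing to try before loading the checkpoint again.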

Questions about the competition timeline

Over 5 years ago

Dipam is correct. There is a trade-off between the two tracks, and solutions tailored to a single track do perform better on that track.

I also agree with the two choices for scoring. I would be against squashing both tracks into one.

During the competition I mostly relied on the AIcrowd platform for evaluating solutions, and seldom trained them locally. As such, I did not optimize for the generalization track.