Location: FR


Challenges Entered

Improve RAG with Real-World Benchmarks


Revolutionise E-Commerce with LLM!


Evaluate Natural Conversations


Small Object Detection and Classification


MosquitoAlert Challenge 2023

Mosquito Alert Challenge Solutions

Over 2 years ago

Hi,

Thanks a lot to the organizers, sponsors and AICrowd team for this quite interesting challenge!

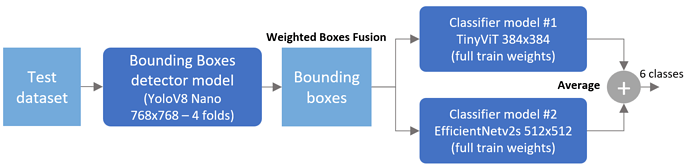

My solution, which reached 3rd place on both the public and private leaderboards (LB), is based on two stages:

- Stage 1: a mosquito bounding-box detector based on YOLO.

- Stage 2: an ensemble of two mosquito classifiers. The first is based on the ViT architecture and the second on EfficientNetV2.

The two main challenges in this competition were the 2-second CPU runtime limit per image and the strong imbalance of the rare classes in the training dataset. These constraints, the runtime limit in particular, restricted the number of models we could ensemble, the TTA options, the image size, and the size of the models themselves.

After several experiments, I concluded that a single off-the-shelf model doing both bounding-box detection and classification would not perform as well as two dedicated, separate models. For bounding boxes, I ensembled 4 YOLOv8 nano models trained on a single class (converted to OpenVINO to speed up inference). For mosquito species, I trained two classifiers with different architectures and image sizes. The following items in the training procedure gave some boost and stability to the classification score:

- Hard augmentations including Mixup + CutMix

- Weight averaging with EMA (exponential moving average)

- Label smoothing

- Creating a 7th class (no mosquito) with background images generated from the training images, without any mosquito in them. I believe that blood and fingers were prone to confusing the model.

- External data that brings more examples of rare classes. I only used a limited subset of what was shared in the external datasets thread because I found it quite noisy.
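The training items above (Mixup, CutMix, EMA, label smoothing) can be sketched framework-free in NumPy. This is not the author's code: the post gives no framework or hyperparameters, so the Beta `alpha` values, `eps=0.1`, `decay=0.999`, the NHWC image layout, and the 7-class targets (6 species plus the "no mosquito" class) are illustrative assumptions.

```python
import numpy as np


def smooth_one_hot(labels, num_classes, eps=0.1):
    """Label smoothing: eps is spread uniformly over all classes, 1 - eps goes to the true class."""
    targets = np.full((len(labels), num_classes), eps / num_classes)
    targets[np.arange(len(labels)), labels] += 1.0 - eps
    return targets


def mixup(images, targets, alpha=0.4, rng=None):
    """Blend each sample with a shuffled partner; soft targets are blended with the same weight."""
    rng = rng if rng is not None else np.random.default_rng()
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(len(images))
    mixed = lam * images + (1.0 - lam) * images[perm]
    return mixed, lam * targets + (1.0 - lam) * targets[perm]


def cutmix(images, targets, alpha=1.0, rng=None):
    """Paste a random rectangle from a shuffled partner; targets are weighted by pasted area."""
    rng = rng if rng is not None else np.random.default_rng()
    n, h, w = images.shape[:3]  # assumed NHWC layout
    lam = rng.beta(alpha, alpha)
    cut_h, cut_w = int(h * np.sqrt(1.0 - lam)), int(w * np.sqrt(1.0 - lam))
    cy, cx = rng.integers(h), rng.integers(w)
    y0, y1 = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    x0, x1 = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    perm = rng.permutation(n)
    out = images.copy()
    out[:, y0:y1, x0:x1] = images[perm, y0:y1, x0:x1]
    # Recompute the mixing weight from the actual (clipped) rectangle area
    lam_adj = 1.0 - ((y1 - y0) * (x1 - x0)) / (h * w)
    return out, lam_adj * targets + (1.0 - lam_adj) * targets[perm]


class EMA:
    """Exponential moving average of weights: shadow = decay * shadow + (1 - decay) * param."""

    def __init__(self, params, decay=0.999):
        self.decay = decay
        self.shadow = {k: v.copy() for k, v in params.items()}

    def update(self, params):
        for k, v in params.items():
            self.shadow[k] = self.decay * self.shadow[k] + (1.0 - self.decay) * v
```

In a real training loop, `EMA.update` would be called after each optimizer step on the model's weights, and the shadow weights would be the ones evaluated and exported.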

Cross-validation F1 for the two single models was 0.870 and 0.860 (best fold: 0.891). However, it was not possible to use the fold models at inference time due to the time limit, so I decided to train a full-fit model to benefit from all the data in a single model. Basic ensembling of both classifiers (no TTA) reached 0.904 on the public LB and 0.915 on the private LB.
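A minimal sketch of the two-stage inference flow described above, assuming one mosquito per image. The post does not say how the four detector outputs or the two classifiers were combined, so this sketch assumes a confidence-weighted average of each detector's top box (a simplified form of weighted box fusion) and a plain average of classifier probabilities. The `detectors` and `classifiers` callables are hypothetical stand-ins for the OpenVINO YOLOv8 models and the ViT/EfficientNetV2 classifiers.

```python
import numpy as np


def fuse_boxes(detections):
    """Confidence-weighted average of one box per detector.

    detections: list of (box, score), box = (x1, y1, x2, y2) in pixels, score > 0.
    Returns the fused box and the mean score.
    """
    boxes = np.array([box for box, _ in detections], dtype=np.float64)
    scores = np.array([score for _, score in detections], dtype=np.float64)
    fused = (boxes * scores[:, None]).sum(axis=0) / scores.sum()
    return fused, float(scores.mean())


def predict(image, detectors, classifiers, class_names):
    """Stage 1: fuse boxes from several detectors. Stage 2: average classifier probabilities."""
    fused, _ = fuse_boxes([det(image) for det in detectors])
    h, w = image.shape[:2]
    x1, y1, x2, y2 = fused.round().astype(int)
    # Clip the fused box to the image before cropping
    x1, y1, x2, y2 = max(x1, 0), max(y1, 0), min(x2, w), min(y2, h)
    crop = image[y1:y2, x1:x2]
    probs = np.mean([clf(crop) for clf in classifiers], axis=0)
    return (int(x1), int(y1), int(x2), int(y2)), class_names[int(np.argmax(probs))]
```

Averaging probabilities (soft voting) rather than taking a majority vote suits a two-model ensemble, where hard votes can easily tie.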

What did not work (or did not improve results):

- Increasing the image size to 1024x1024 did not bring more useful information to the models

- Forcing the aspect ratio to 1.0 on resize did not help

- Single-stage 6-class YOLO model (both regular and RT-DETR)

- Single-stage 6-class EfficientDet model

- SWA (last 5 epochs or best 5)

- Model pruning (to speed up inference)

- Post-processing: adding or removing a margin around the predicted bounding box

- Training on mosquito bodies only. I created a mosquito body extractor, but my conclusion is that relying on the head, thorax, and dorsal region is not enough: the legs and wings carry predictive power too. That might be obvious to experts, but based on past experience with other species it was worth trying.

Cheers.

External datasets used by participants

Over 2 years ago

I’m now using the external datasets above (iNaturalist subset + Kaggle) shared by other participants.

External datasets used by participants

Over 2 years ago

@OverWhelmingFit @tfriedel Are you sure your external data does not contain any subset of Mosquito Alert data (beyond what is provided in the training dataset)? That is forbidden by the rules.

External datasets used by participants

Over 2 years ago

Yes, really, but I’m fairly sure I’m overfitting the public LB.

External datasets used by participants

Over 2 years ago

Hi guys,

Thanks for sharing these links! No external data so far for me, but I plan to try some of the ones you’ve shared soon.

Submissions are quite unstable

Over 2 years ago

Hi, not on my side. When I submit the same code twice, it usually works as long as I’m fairly sure I’m under 1200 ms. Everything above 1200 ms fails.

🚨 Important Updates for Round 2

Over 2 years ago

They said:

So the private score is already known but hidden: final testing is already done and cannot fail.

The submission needs japonicus-koreicus class not the japonicus/koreicus

Over 2 years ago

For the initial images, it’s easy to work around with a warmup. Usually the problem (a timeout, I guess) occurs 40 to 50 minutes after submitting. My local CPU is 4x faster than AIcrowd’s, so I only compare submissions against each other on AIcrowd, since we have logs of the “MosquitoalertValidation” stage.
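Since the per-image limit is checked on a slower, shared CPU, a local harness that warms the model up and then reports the worst-case per-image time against a reduced target can flag risky submissions before uploading. This is a sketch under assumptions: `predict_fn` is any hypothetical per-image inference function, the warmup count is arbitrary, and `safety=0.6` simply encodes the roughly 1200 ms out of the 2 s limit that the posts here report as safe.

```python
import statistics
import time


def measure_latency(predict_fn, inputs, warmup=3, budget_s=2.0, safety=0.6):
    """Time predict_fn on each input after a few untimed warmup calls.

    The official budget is scaled by `safety` to leave headroom for a
    slower or busier evaluation machine (0.6 * 2.0 s = 1.2 s).
    """
    for x in inputs[:warmup]:
        predict_fn(x)  # untimed: lazy initialization, caches, graph compilation
    timings = []
    for x in inputs:
        start = time.perf_counter()
        predict_fn(x)
        timings.append(time.perf_counter() - start)
    target = budget_s * safety
    worst = max(timings)
    return {
        "max_s": worst,
        "median_s": statistics.median(timings),
        "target_s": target,
        "within_target": worst <= target,
    }
```

The worst case matters more than the median here, because a single slow image is enough to fail the check. Since the local CPU was about 4x faster than the evaluation machine, local timings should be scaled up by a similar factor before comparing them with `target_s`.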

The submission needs japonicus-koreicus class not the japonicus/koreicus

Over 2 years ago

OMG! Almost 2 weeks and more than 70 submissions trying to understand why the CV/LB gap was so huge. My first assumption was that I had messed up the label mapping on my side, but I did not take the time to fully probe the LB to detect this issue. The Round 1 baseline notebook here is simply wrong for Round 2.

class_labels = {
    "aegypti": 0,
    "albopictus": 1,
    "anopheles": 2,
    "culex": 3,
    "culiseta": 4,
    "japonicus/koreicus": 5,
}

Note the correct labels in the random model here:

self.class_names = [
    "culex",
    "japonicus-koreicus",
    "culiseta",
    "albopictus",
    "anopheles",
    "aegypti",
]
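The fix boils down to decoding predictions by class name rather than by position: the random model's list uses a different order from the Round 1 indices and spells the last class `japonicus-koreicus`. A minimal sketch, assuming the classifier was trained with the Round 1 indices above; `ROUND2_CLASS_NAMES`, `RENAME`, `INDEX_TO_NAME`, and `decode` are hypothetical names.

```python
# Round 1 training indices (the mapping from the baseline notebook)
ROUND1_LABELS = {
    "aegypti": 0, "albopictus": 1, "anopheles": 2,
    "culex": 3, "culiseta": 4, "japonicus/koreicus": 5,
}
# Class names expected by the Round 2 evaluator, as listed in the random model
ROUND2_CLASS_NAMES = ["culex", "japonicus-koreicus", "culiseta", "albopictus", "anopheles", "aegypti"]

# Invert the training mapping and rename the one class whose spelling differs
RENAME = {"japonicus/koreicus": "japonicus-koreicus"}
INDEX_TO_NAME = {idx: RENAME.get(name, name) for name, idx in ROUND1_LABELS.items()}

# Sanity check: every name the model can emit is one the evaluator accepts
assert set(INDEX_TO_NAME.values()) == set(ROUND2_CLASS_NAMES)


def decode(pred_index: int) -> str:
    """Map a Round 1 class index to the Round 2 class name (never index ROUND2_CLASS_NAMES directly)."""
    return INDEX_TO_NAME[pred_index]
```

The module-level assertion fails immediately if a class name is misspelled, which is exactly the kind of silent mismatch that shows up only as a large CV/LB gap.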

Without your finding, @saidinesh_pola, I was about to conclude that the test set distribution was totally different from the training set, and I was going to give up on the lottery.

Thanks again! The competition can start now.

BTW: the submission issue I reported is still there. It happens in about 2 out of 10 submissions for me: the same code submitted twice may either fail or succeed. The 2-second per-image limit check is not stable. In practice, I think the safe limit is closer to 1.2 seconds than to 2 seconds. So one should submit the same code several times to be sure whether it really works or fails.

YOLO-NAS model allowed?

Over 2 years ago

Hi,

@jgarriga65 Is YOLO-NAS model allowed for this challenge?

I believe the answer is no due to licensing; could you confirm?

Thanks.

Submissions are quite unstable

Over 2 years ago

@dipam Any status on the 2 submissions I provided?

With the new 2-second limit, I can see that my model (run on a dummy 4000x3000 image) takes 1125 ms; it passes the MosquitoalertValidation step but fails the MosquitoalertPrediction step after a few moments. For instance:

I agree with @tfriedel that using an average after the final evaluation would be better.

Outdated system image for submission

Over 2 years ago

@dipam provided the solution (a Dockerfile) to allow Python 3.10, and it works fine.

Thanks again.

Details below:

Remote: fatal: pack exceeds maximum allowed size

Over 2 years ago

See this thread; I had the same issue:

Submissions are quite unstable

Over 2 years ago

Totally agree that an average would be much better. You can verify the 1 s per-image assumption by adding and checking some logs during the Validation stage.

Submissions are quite unstable

Over 2 years ago

It looks like the evaluation of a submission is quite unstable: the same code submitted twice may either fail or succeed. It is probably related to the cluster workload and the 1-second requirement check. The same image that took 800 ms in one submission might take 1200 ms in another.

Example below:

Not sure how the organizers could improve this, but it makes things more difficult for us.

Outdated system image for submission

Over 2 years ago

Hi,

The system image for submissions comes with Python 3.7, which is too outdated to benefit from current industry standards in the ML domain. Could you update it to at least 3.10, since it’s out of our control? It would benefit everybody, especially as we have to run inference on each image in less than 1 second.

Even the phase2-starter-kit is broken on inference:

ultralytics 8.0.151 requires numpy>=1.22.2, but you'll have numpy 1.21.6 which is incompatible.

I’ve created an issue on GitLab:

Thanks.

Submission script error

Over 2 years ago

Answering my own question.

I started again from scratch, without modifying the project name when forking, and carefully installed Git LFS before any commit, tracking all .pt and .pth files:

git lfs install

git lfs track "*.pt"

git lfs track "*.pth"

git add .gitattributes

And made sure that the remote was set:

git remote add aicrowd git@gitlab.aicrowd.com:MYACCOUNT/mosquitoalert-2023-phase2-starter-kit.git

Then I added and committed my large model file, and pushed:

git push aicrowd master

And it worked in GitLab. I created the submission manually with the tag option, not with the submit script.

Submission script error

Over 2 years ago

Also checked:

and:

No luck.

When I run the git lfs ls-files command, I can see my model files listed, but git push fails:

0f11b1dd84 * my_models/yolo_model_weights/mosquitoalert-yolov5-baseline.pt

b36d4aa091 * my_models/MYMODEL/weights/best_model.pth

The only change is the addition of the best_model.pth file; the previous push was working.

My .gitattributes:

*.pth filter=lfs diff=lfs merge=lfs -text

*.pt filter=lfs diff=lfs merge=lfs -text

Mosquito Alert Challenge Solutions

Over 2 years ago

Lower compared to other competitors? Possibly. Larger models would certainly have performed better, but I also removed a lot of external data because I didn’t take the time to implement a cleanup procedure. I generated bounding-box pseudo-labels from high-confidence YOLO predictions: everything below 0.7 confidence was dropped.
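The pseudo-labeling step can be sketched as: keep, for each external image, the highest-confidence detector box, and drop the image if that score is below 0.7. The `Detection` record and the one-box-per-image rule are assumptions for illustration; the post only states the 0.7 cut-off.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    image_id: str
    box: tuple  # (x1, y1, x2, y2) in pixels
    score: float


def select_pseudo_labels(detections, threshold=0.7):
    """Keep the best box per image only if its confidence reaches the threshold."""
    best = {}
    for det in detections:
        if det.image_id not in best or det.score > best[det.image_id].score:
            best[det.image_id] = det
    # Images whose best box scores below the threshold are dropped entirely
    return {img: d.box for img, d in best.items() if d.score >= threshold}
```

A hard cut-off trades recall for label quality: fewer external images survive, but the ones that do are less likely to teach the classifier a wrong crop, which matters when the external data is already noisy.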