🚩New Updates

Nov 8th -- Final Result Out! Check it out on the Leaderboard.

Oct 30th -- PvP submission deadline extended to 2022-11-02 06:59 (UTC).

Oct 13th -- Imitation Learning baseline 2.0 released with a 0.7 top1 ratio achieved.

Oct 10th -- PvP Leaderboard starts updating on a daily basis for rapid iteration.

Oct 1st -- See how to submit an offline learning solution in this versioned Starterkit.

Sep 29th -- Unplugged Prize established with Replay dataset and IL Baseline released.

Sep 27th -- AWS Credits available for teams lacking hardware support.

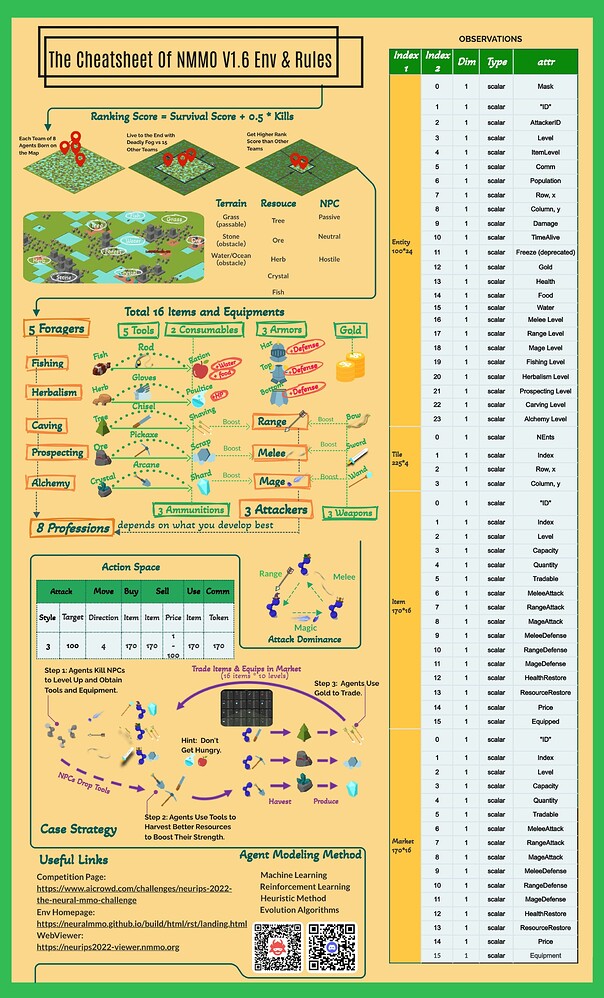

Sep 21st -- NMMO V1.6 Cheatsheet for a quick check.

Sep 16th -- RL baseline 2.0 released with a model checkpoint for rollout and submission.

🚀Starterkit - Everything you need to submit.

🌳Env Rules - Detailed rules about the challenge environment.

📃Project Page - Documentation, API reference, and tutorials.

📓RL Baseline 2.0 - A torchbeast-based RL baseline that reaches a 0.5 Top1 Ratio after one day of training on a PC with a GPU.

📹WebViewer - A web replay viewer for our challenge.

📞Support - The support channels are the place for any questions, issues, or topics you would like to discuss.

🎈Final Result

🔛Introduction

Specialize and Bargain in Brave New Worlds!

Forage, fight, and bargain your way through procedurally generated environments to outcompete other participants trying to do the same.

Your task is to implement a policy--a controller that defines how an agent team will behave within an environment--to score a victory in a game. There are few restrictions: you may leverage scripted, learned, or hybrid approaches incorporating any information and any available hardware (cloud credits are available).

The tournament consists of two stages: PvE and PvP. Your agents will fight against built-in AIs in the former and other user submissions in the latter. The competition takes the form of a battle royale: defeat your opponents and be the last team standing. You may consider all of the environment's various mechanics, skills, and equipment as tools to help your agents survive, but there are no longer any specific objectives in each of these categories. In the end, the top 16 teams will battle it out in the same environment.

🏔️Competition Structure

The Neural MMO Platform

Neural MMO is an open-source research platform that simulates populations of agents in procedurally generated virtual worlds. It is inspired by classic massively multiplayer online role-playing games, MMORPGs or MMOs for short, as settings where lots of players using entirely different strategies interact in interesting ways. Unlike other game genres typically used in research, MMOs simulate persistent worlds that support rich player interactions and a wider variety of progression strategies. These properties seem important to intelligence in the real world, and the objective of this competition is to spur research towards increasingly general and cognitively realistic environments. Please find a comprehensive introduction to the NMMO environment here.

Competition Environment Setting

In this challenge, your policy will control a team of 8 agents and will be evaluated in a battle royale against other participants on 128x128 maps with 15 other teams for 1024 game ticks. Towards the end of each episode, an oh-so-original death fog will close in on the map, forcing all agents towards the center. An agent can observe a local terrain of 15x15 tiles and state info about itself, other agents, and NPCs within this window. Each agent may take multiple actions per tick--one per category: move, attack, use, sell, and buy. Please find a detailed challenge environment tutorial here and Game Wiki here.
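The settings above can be collected into a small configuration object. This is only an illustrative sketch: `ChallengeSettings`, `ACTION_CATEGORIES`, and `validate_actions` are hypothetical names chosen for this example and are not part of the Neural MMO API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChallengeSettings:
    """Numbers taken from the environment description (names are hypothetical)."""
    team_size: int = 8      # agents controlled by one policy
    num_teams: int = 16     # your team plus 15 opponents
    map_size: int = 128     # maps are 128x128 tiles
    horizon: int = 1024     # game ticks per episode
    obs_window: int = 15    # each agent observes 15x15 tiles


# One action per category may be issued by each agent on every tick.
ACTION_CATEGORIES = ("move", "attack", "use", "sell", "buy")


def validate_actions(actions: dict) -> dict:
    """Reject categories that are not listed in the description.

    A dict holds at most one value per key, so "one action per
    category" is enforced by the data structure itself.
    """
    unknown = set(actions) - set(ACTION_CATEGORIES)
    if unknown:
        raise ValueError(f"unknown action categories: {sorted(unknown)}")
    return dict(actions)


settings = ChallengeSettings()
total_agents = settings.team_size * settings.num_teams
```

With these defaults, `total_agents` is 128, the number of player-controlled agents that start in one game.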

The cheatsheet of NMMO V1.6 for quick reference:

Competition Rules

Your aim is to defeat and outlive your opponents. How you accomplish this is up to you. One big rule: no collusion. You cannot team up with other submissions. The competition will run in two settings, with PvP as the main stage and PvE as the sub-stage. A skill rating algorithm will update your rank based on the opponents you defeat and lose against.

PvE Track

PvE is short for player versus environment, meaning the participant's policy competes with built-in AI teams. The PvE track will unfold in 2 stages with increasingly difficult built-in AIs.

Stage 1: Your policy will be thrown into a game with 15 scripted built-in AI teams immediately upon submission and evaluated over 10 consecutive rounds. The result of each submission will be presented as a Top1 Ratio, which counts how many of the 10 rounds you won (see Evaluation Metrics). Each built-in AI team in stage 1 consists of 8 agents with 8 respective skills, and its policy is open-sourced, so the evaluation environment is accessible during your training procedure. Stage 1 is aimed at helping new participants get familiar with the challenge quickly.

Stage 2: Achieving a 0.5 Top1 Ratio in stage 1 qualifies your policy for stage 2. The rules are all the same, except that the built-in AIs are elaborately designed by the organizers and trained with a deep reinforcement learning framework developed by Parametrix.AI. Stage 2 is aimed at further boosting the challenge's intensity--these bots absolutely annihilate the stage 1 built-in AIs, and defeating them is a significant achievement.
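The Top1 Ratio and the 0.5 qualification threshold can be sketched as follows. The function names are hypothetical, and treating "achieving 0.5" as "at least 0.5" is an assumption of this sketch rather than something the rules state explicitly.

```python
def top1_ratio(placements):
    """Fraction of rounds in which the team finished first.

    `placements` holds the team's final rank in each round,
    for example the 10 rounds of one PvE evaluation.
    """
    if not placements:
        raise ValueError("at least one round is required")
    wins = sum(1 for rank in placements if rank == 1)
    return wins / len(placements)


def qualifies(placements, threshold=0.5):
    """Assumption: reaching the threshold exactly is enough to qualify."""
    return top1_ratio(placements) >= threshold


# Hypothetical evaluation: 6 wins out of 10 rounds.
rounds = [1, 1, 3, 1, 2, 1, 1, 5, 1, 4]
ratio = top1_ratio(rounds)
```

Here `ratio` is 0.6, so this hypothetical submission would pass the 0.5 bar under the assumption above.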

PvP Track

PvP is short for player versus player, meaning that the participant policy competes with other participants' policies.

Matchmaking: Achieving a 0.5 Top1 Ratio in stage 1 qualifies your policy for the PvP stage. Once per week (the frequency may be adjusted according to the number of participants), we will run matchmaking to throw your latest qualified policy into games against other participants’ submissions. Your objective is unchanged. We will use match results to update a skill rating (SR) estimate that will be posted to the leaderboards.

Final Evaluation: In the last week of the competition, we will run additional matches to denoise scores. Your latest qualified policy will participate in at least 1,000 games. The top 16 submissions will be thrown into an additional 1,000 games together to determine the final ranking of the top 16 policies.

At the submission deadline on October 30th, submissions will be locked and no further entries will be accepted. From October 31st to November 15th, we will continue to run the PvP tournament with more rollouts. After this period, the leaderboard will be finalized and used to determine who receives the respective ranking-based prizes.

🏆Awards and Prizes

Several awards will be issued to PvE and PvP winners, either in monetary incentives or in the form of a certificate.

PvE-Pioneer Award

The Pioneer Prize will be awarded to the first participant to achieve a 1.0 Top1 Ratio in the built-in AI environment of each PvE stage.

- The first participants to reach a 1.0 Top1 Ratio in Stage 1 and Stage 2 will receive $300 and $700 respectively.

PvE-Sprint Award

Sprint Award Certificates will be granted to the top 3 participants on the PvE leaderboard released at fortnightly intervals.

PvP-Main Prize

Monetary prizes will be distributed to the top 16 teams on the PvP Leaderboard at the end of the competition. The 1st, 2nd, and 3rd place participants will share the Gold Tier title and receive $6,000, $3,000, and $1,500 respectively. The 4th to 8th place participants will share the Silver Tier title and receive $700 per team. The 9th to 16th place participants will share the Bronze Tier title and receive $300 per team. All of the top 64 teams will receive customized certificates as proof of their ranking.

PvP-Gold Farmer Prize

The $1000 Gold Farmer Prize will be awarded to the team best at making money or reserving gold. We hope the setting of this prize will promote behaviors like killing and selling.

PvP-Tank Prize

The $1000 Tank Prize will be awarded to the team that receives the highest total damage. We hope the setting of this prize will inspire more unexpected policies.

🆕PvP-Unplugged Prize

The $1000 Unplugged Prize will be awarded to the team that achieves the highest SR score in the PvP final using only an Offline Learning Solution. The prize winner will be required to submit their training code to the organizers for verification and to secure a reproducible result at the end of the competition. Please note that any form of online RL method is not eligible for this prize, and the data you may use includes but is not limited to all replay data on the competition website.

🧮Evaluation Metrics

Score Metrics

Your submission score will be calculated based on the number of other agents (not NPCs) you defeat (that is, on whom you inflict the final blow) and how long at least one member of your team remains alive.

Each player kill nets you 0.5 points. The team's total kill score is the sum of kills across the team.

Survive longer than your opponents to score in this category. The team's survival time depends on when the last agent dies. Specifically, the survival scores in each tournament from high to low are 10, 6, 5, 4, 3, 2, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0 and will be assigned to the team surviving the longest, the second longest, the third longest, and so on respectively. With team survival time being equal, the team with more survivors ranks higher; with the number of survivors being equal, the average level of your agents will be taken into account; with the above factors all being equal, the tied teams will split the ranking scores.

Example: if your team lands the last hit on 3 agents and survives 2nd longest, you will receive 3 * 0.5 + 6 = 7.5 points.
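The scoring rules above can be sketched in a few lines of Python. All names here are hypothetical. The tie-break order (survival time, then number of survivors, then average level) follows the text, but averaging the points of the shared ranks is only one reading of "split the ranking scores" and is an assumption of this sketch.

```python
# Survival points for ranks 1..16, as listed in the rules.
SURVIVAL_POINTS = [10, 6, 5, 4, 3, 2, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
KILL_POINTS = 0.5  # points per player kill (NPC kills do not count)


def team_score(player_kills, survival_rank):
    """Kill points plus survival points for a 1-based survival rank."""
    if not 1 <= survival_rank <= len(SURVIVAL_POINTS):
        raise ValueError("survival_rank must be between 1 and 16")
    return player_kills * KILL_POINTS + SURVIVAL_POINTS[survival_rank - 1]


def survival_order(teams):
    """Sort teams from longest to shortest survival.

    Each team is a tuple (name, survival_ticks, survivors, avg_level);
    later fields only matter when the earlier ones are equal.
    """
    return sorted(teams, key=lambda t: (t[1], t[2], t[3]), reverse=True)


def split_tied_points(first_rank, num_tied):
    """Assumed split: tied teams share the points of the ranks they occupy."""
    shared = SURVIVAL_POINTS[first_rank - 1:first_rank - 1 + num_tied]
    return sum(shared) / num_tied


example = team_score(player_kills=3, survival_rank=2)
```

`example` reproduces the worked example: 3 kills and the 2nd-longest survival give 7.5 points. Under the assumed split, two teams tied for first place would each receive (10 + 6) / 2 = 8 survival points.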

Ranking Metrics

The PvE Leaderboard is sorted by Top 1 Ratio, with ties broken by submission time: the early bird gets the worm. Since performance will vary against different opponents, we compute SR from matchmaking to roughly rank the PvP leaderboard before the final evaluation. Since opponents for the final evaluation are fixed to the top 16 submissions, SR is not required, and ranking will be determined using only the average score.
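As a rough illustration of the two simplest orderings described above, the sketch below sorts a PvE leaderboard by Top1 Ratio with earlier submissions winning ties, and averages per-game scores for the final evaluation. The function names and sample entries are hypothetical, and the SR computation used before the final is not modeled here.

```python
from datetime import datetime


def pve_leaderboard(entries):
    """Sort by Top1 Ratio (high to low), then by submission time (early first).

    Each entry is a tuple (team, top1_ratio, submitted_at).
    """
    return sorted(entries, key=lambda e: (-e[1], e[2]))


def final_average_score(game_scores):
    """Final-evaluation ranking uses the plain average of per-game scores."""
    if not game_scores:
        raise ValueError("at least one game score is required")
    return sum(game_scores) / len(game_scores)


# Hypothetical entries for illustration only.
entries = [
    ("late_bird", 0.8, datetime(2022, 9, 20, 12, 0)),
    ("early_bird", 0.8, datetime(2022, 9, 1, 9, 30)),
    ("newcomer", 0.5, datetime(2022, 8, 25, 8, 0)),
]
ranking = [team for team, _, _ in pve_leaderboard(entries)]
```

In this example `ranking` places `early_bird` ahead of `late_bird` because both have a 0.8 Top1 Ratio and `early_bird` submitted first.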

📆Competition Timeline

- August 18th, 2022 - PvE stage 1 begins, Starterkit releases and the submission system opens

- August 31st, 2022 - PvP begins

- September 22nd, 2022 - PvE stage 2 begins

- October 24th, 2022 - Entry and team formation deadline

- October 30th, 2022 - Final submission deadline

- October 31st to November 15th, 2022 - Final PvP evaluation

- November 16th, 2022 - Winners announced

Note: The competition organizers reserve the right to update the competition's timeline if they deem it necessary. All deadlines are at 11:59 PM PST on the corresponding day unless otherwise noted.

📔Manual Center

StarterKit

Neural MMO is fully open-sourced and includes scripted and learned baselines with all associated code. We provide a starter kit with example submissions, local evaluation tools, and additional debugging utilities. The documentation in the starter kit provided will walk you through installing dependencies and setting up the environment. Our goal is to enable you to make your first test submission within a few minutes of getting started.

2022 NeurIPS NMMO Env Tutorial

Please find the Environment Tutorial through this link.

📞Support Channels

Please feel free to seek support from any of the following channels:

🔗Discord Channel Discord is our main contact and support channel. Any questions and issues can also be posted on the discussion board.

💬AIcrowd Discussion Channel The AIcrowd discussion board offers a quick way to ask questions, start discussions, and report issues.

🧑🏻🤝🧑🏻WeChat Group Our second support channel is the Tencent WeChat Group. Please add the WeChat account “chaocanshustuff” as a friend or scan the QR code to join the group.

🤖Team Members

Joseph Suarez (MIT)

Jiaxin Chen (Parametrix.AI)

Bo Wu (Parametrix.AI)

Enhong Liu (Parametrix.AI)

Hao Cheng (Parametrix.AI)

Xihui Li (Parametrix.AI, Tsinghua University)

Junjie Zhang (Parametrix.AI, Tsinghua University)

Yangkun Chen (Parametrix.AI, Tsinghua University)

Hanmo Chen (Parametrix.AI, Tsinghua University)

Henry Zhu (Parametrix.AI)

Xiaolong Zhu (Parametrix.AI)

Chenghui You (Parametrix.AI, Tsinghua University)

Jun Hu (Parametrix.AI)

Jyotish Poonganam (AIcrowd)

Vrushank Vyas (AIcrowd)

Shivam Khandelwal (AIcrowd)

Sharada Mohanty (AIcrowd)

Phillip Isola (MIT)

Julian Togelius (New York University)

Xiu Li (Tsinghua University)
