Location

IN



Challenges Entered

Evaluate Natural Conversations


Small Object Detection and Classification


Understand semantic segmentation and monocular depth estimation from downward-facing drone images


Audio Source Separation using AI

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 209116 |

Identify user photos in the marketplace


Using AI For Building’s Energy Management


Interactive embodied agents for Human-AI collaboration


Behavioral Representation Learning from Animal Poses.


ASCII-rendered single-player dungeon crawl game


Measure sample efficiency and generalization in reinforcement learning using procedurally generated environments

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 94599 |
| graded | 94551 |
| graded | 93732 |

3D Seismic Image Interpretation by Machine Learning


Multi-agent RL in game environment. Train your Derklings, creatures with a neural network brain, to fight for you!


5 Problems 15 Days. Can you solve it all?

Latest submissions


| Status | Submission ID |
|---|---|
| graded | 200973 |
| graded | 200972 |

| Participant | Rating |
|---|---|
| priteshgohil | 0 |
| nachiket_dev_me18b017 | 0 |
| cadabullos | 0 |

Data Purchasing Challenge 2022

IMPORTANT: Details about end of competition evaluations 🎯

Almost 4 years ago

@ArtemVoronov It’s intentional. Please select 4.

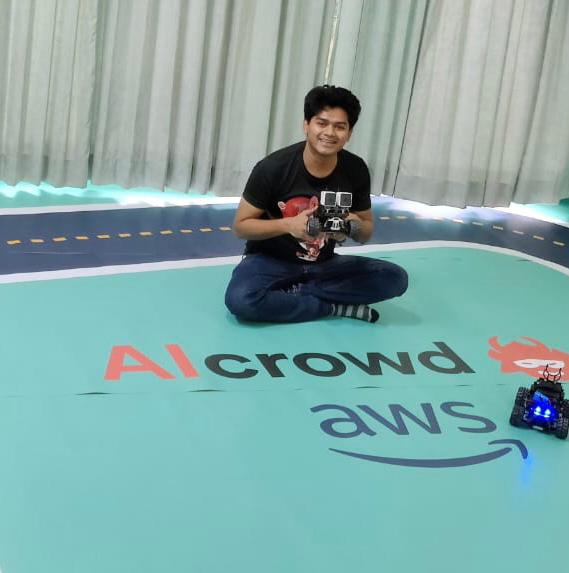

NeurIPS 2021 AWS DeepRacer AI Driving Olympics Cha

Ideas for better sim2real transfer

About 4 years ago

This was your last successful submission, made on 24th November; its result has already been updated for a few days. Failed submissions are not run on the real track. It seems your submission was consistently turning towards the right side, so its score is lower than that of your previous submission.

Please check all your other successful submissions after 20th November and let me know if any are missing. Until recently we were running the best submission per user per day, and we’ll run any older ones that are missing.

Please let me know if I can help with anything else. I’ll try my best, but I might be a bit delayed since I’m travelling today.

Regarding evaluation criteria for online submissions

About 4 years ago

Sorry, I missed replying to this post earlier.

The reward function for both rounds is staying close to the center. This is a proxy reward, but it’s good enough because the action space does not allow stopping. The score on the simulation leaderboard is indeed the mean cumulative reward over multiple episodes. The maximum possible reward may differ between the two rounds because the tracks are different.

However, the main idea of the competition is sim2real transfer, and the formula for the real track is based on waypoints crossed and time. The final prizes are based on the real track leaderboard, with a small weight given to the simulation scores. I’ll make a separate post to clarify the formula for the real track.
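As a rough illustration of how a simulation score of this kind can be computed, the sketch below sums the per-step rewards of each episode and averages across episodes. The `center_reward` shaping (a linear falloff with distance from the center line) and all parameter names are assumptions made for the example; the post does not give the actual reward formula used by the challenge.

```python
from statistics import mean


def center_reward(distance_from_center: float, half_track_width: float) -> float:
    """Hypothetical proxy reward: 1.0 on the center line, 0.0 at or beyond the track edge."""
    if half_track_width <= 0:
        raise ValueError("half_track_width must be positive")
    closeness = 1.0 - abs(distance_from_center) / half_track_width
    return max(0.0, closeness)


def mean_cumulative_reward(episodes: list[list[float]]) -> float:
    """Sum the per-step rewards of each episode, then average across episodes."""
    if not episodes:
        raise ValueError("at least one episode is required")
    return mean(sum(step_rewards) for step_rewards in episodes)


# Two made-up episodes, given as per-step distances from the center line.
distances = [[0.0, 0.1, 0.2], [0.3, 0.4]]
episodes = [[center_reward(d, half_track_width=0.4) for d in ep] for ep in distances]
score = mean_cumulative_reward(episodes)
print(f"mean cumulative reward: {score:.3f}")
```

Because the cumulative reward grows with episode length, a longer track yields a higher attainable total, which is one way the maximum score can differ between tracks.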

Round 2 is live!

About 4 years ago

Hi everyone,

The much-awaited Round 2 of the DeepRacer Challenge is now live. Submissions will now be run on a real DeepRacer track every day until November 30th.

The submission flow will be as follows:

Each team is allowed up to 5 successful submissions every day; these will run on the Round 2 simulator.

The top-scoring submission from each team each day will be run on the real track.

Since the time remaining for Round 2 is short, we encourage everyone to train their models on the Round 2 simulator as soon as possible.

There will be no obstacles on the track, but sim2real transfer will not be a trivial task. Be on the lookout for on-board videos from your submissions to help you fine-tune your solutions. All the best!
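The daily selection rule in the submission flow above can be sketched as a small grouping step: keep only successful submissions, then take the highest simulator score per team per day. The `Submission` record and its field names are hypothetical, and the sketch does not enforce the cap of 5 successful submissions per day; it only shows the "top-scoring per team per day" choice.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Submission:
    # All field names are hypothetical, chosen for this example only.
    submission_id: int
    team: str
    day: date
    score: float
    successful: bool


def pick_real_track_runs(
    submissions: list[Submission],
) -> dict[tuple[str, date], Submission]:
    """Return the highest-scoring successful submission for each (team, day)."""
    best: dict[tuple[str, date], Submission] = {}
    for sub in submissions:
        if not sub.successful:
            continue  # failed submissions are not candidates for the real track
        key = (sub.team, sub.day)
        if key not in best or sub.score > best[key].score:
            best[key] = sub
    return best


# Made-up data for illustration.
example = [
    Submission(1, "team_a", date(2021, 11, 24), 10.0, True),
    Submission(2, "team_a", date(2021, 11, 24), 12.5, True),
    Submission(3, "team_a", date(2021, 11, 24), 99.0, False),
    Submission(4, "team_b", date(2021, 11, 24), 7.0, True),
]
selected = pick_real_track_runs(example)
for (team, day), sub in sorted(selected.items()):
    print(team, day.isoformat(), sub.submission_id)
```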

⏰ Round 1 extended till Nov. 10th!

Over 4 years ago

We’re in the process of setting up the track. Thanks for your patience.

Updates for starter kit

Over 4 years ago

Hi @asche_thor

This was an older issue that has since been fixed. Are you on the latest version of the env Docker release?

Can you please pull the latest Docker image and check again?

Flatland

Some questions about the agents' speed

Over 4 years ago

It seems I missed answering the question on varying speeds; thanks @sa7show for the answer. Indeed, you’re right: the speed of each train is decided at the start of the episode and stays fixed throughout the episode.

NeurIPS 2021 - The NetHack Challenge

How to revise “Select Submissions” form?

Over 4 years ago

@fxofxo You can make 3 new submissions in Round 2, all of which will be considered for the final evaluation. If this is not sufficient, let us know and we can arrange to change the old ones.

Example of using non-neural model

Over 4 years ago

Hi @pajansen

The env returns observations that are already separated and encoded into a dictionary for convenience; this has nothing to do with neural nets. Is there a particular format you need?

Colab Notebook including submission and working ttyrec recording

Over 4 years ago

Hi @charel_van_hoof, could you try once with Git Bash on Windows? I’ll try to check, but I think nle is currently not supported on Windows. The ttyplay2 script alone might still work.

IITM RL Final Project-b5d2e6

Submission Error : Inference Failed

Over 4 years ago

It seems the error is due to the RAM usage of your code. The evaluations run with a limit of 4 GB of RAM; please make sure your code stays within this limit.

Some garbage collection code in Python might help.
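A minimal sketch of what such garbage collection code might look like, assuming the workload can be processed in chunks: each chunk is released explicitly and `gc.collect()` is called before the next one is loaded. The function and its parameters are hypothetical stand-ins. `tracemalloc` only tracks memory allocated by Python itself, so it is a rough local check and not a measurement of the evaluator's 4 GB limit.

```python
import gc
import tracemalloc


def process_in_chunks(n_chunks: int, chunk_size: int) -> int:
    """Process data chunk by chunk, releasing each chunk before loading the next."""
    total = 0
    for _ in range(n_chunks):
        chunk = list(range(chunk_size))  # stand-in for loading a batch of data
        total += sum(chunk)
        del chunk     # drop the only reference so the memory can be reclaimed
        gc.collect()  # also free objects kept alive only by reference cycles
    return total


tracemalloc.start()
result = process_in_chunks(n_chunks=4, chunk_size=100_000)
current_bytes, peak_bytes = tracemalloc.get_traced_memory()
tracemalloc.stop()
print(f"peak traced memory: {peak_bytes / 1e6:.1f} MB")
```

Keeping only one chunk alive at a time bounds peak memory by the chunk size, not by the total amount of data processed.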

Submission limit

Over 4 years ago

You should be able to test everything locally and then submit.

Can you please share why the extra submissions are needed?

Episode Count in Runner

Almost 5 years ago

BSuite sets the limit of 10000 as a fair number of episodes needed to converge.

Generally speaking, to have a level playing field among all competitors while also not making it too easy, some constraint has to be applied. In this case, the number of episodes serves that role.

I know the number 10000 may be arbitrary, so if enough students feel it should be increased, we’ll do it. For now, please try to improve your algorithm to get the highest score within 10000 episodes.

Best Regards,

Dipam

IIT-M RL-ASSIGNMENT-2-GRIDWORLD

Welcome to your 2nd Assignment!

Almost 5 years ago

Unfortunately, the choice of giving extra test cases is not up to me; please talk to the TAs.

In my opinion, the test cases provided are sufficient. Please review your code properly.

Welcome to your 2nd Assignment!

Almost 5 years ago

Can you check whether your local scoring cell runs without any error?

If your local scoring cell gives an error, it’s probably because the output format is wrong; check the targets file for the output format.

If your local scoring cell gives no error, please let me know.

Welcome to your 2nd Assignment!

Almost 5 years ago

This sounds like a formatting issue with the code on your end. Since it’s not a general issue that affects all students, I encourage you to find the bug on your own. With the correct format, you should get decimals for all algorithms in the local scoring code. Look at the targets for an example of the format.

Do let me know if the problem still persists after you’ve checked it thoroughly.

Welcome to your 2nd Assignment!

Almost 5 years ago

The error appears to say that you need to accept the challenge rules. Can you please accept the rules and then try submitting again?

IIT-M RL-ASSIGNMENT-2-TAXI

Unable to submit post 20th april

Almost 5 years ago

We recently fixed this. Can you close the notebook and run it again from the start? That should fix it.

Multi-Agent Behavior: Representation, Model-17508f

Can we use training data from task 1 or 2 for task 3?

Almost 5 years ago

Hi @sungbinchoi

Yes, you can use the training data of Task 1 for Tasks 2 and 3. Feel free to use all the data at your disposal.

You can even use some unsupervised learning on the test sequences.

Notebooks

[Explainer] - EDA of Seismic data by geographic axis

Here’s my EDA notebook on how the seismic data varies by geographic axis, along with some ideas for training.

dipam_chakraborty · Over 5 years ago

IMPORTANT: Details about end of competition evaluations 🎯

Almost 4 years ago

Hi @chuifeng

There will be no live submissions with these changes. You will have to select 2 of your submissions from Round 2 for the final evaluations. A form and instructions for selecting submissions will be provided before the competition ends.