Activity

Challenge Categories

Challenges Entered

Trick Large Language Models

Latest submissions

Identify user photos in the marketplace

Latest submissions

A benchmark for image-based food recognition

Latest submissions

| Status | Submission ID |
|---|---|
| failed | 177330 |
| graded | 177329 |
| graded | 177328 |

Using AI For Building’s Energy Management

Latest submissions

What data should you label to get the most value for your money?

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 177046 |
| failed | 176542 |
| failed | 176404 |

Interactive embodied agents for Human-AI collaboration

Latest submissions

Behavioral Representation Learning from Animal Poses.

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 179081 |
| graded | 173187 |
| graded | 173085 |

Airborne Object Tracking Challenge

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 150618 |
| graded | 148372 |
| graded | 145797 |

ASCII-rendered single-player dungeon crawl game

Latest submissions

| Status | Submission ID |
|---|---|
| submitted | 158196 |
| failed | 158192 |
| failed | 158191 |

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 180080 |
| graded | 180079 |

Training sample-efficient agents in Minecraft

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 158774 |
| graded | 147099 |
| graded | 141760 |

Improving the HTR output of Greek papyri and Byzantine manuscripts

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 188253 |
| graded | 188252 |
| graded | 188221 |

Machine Learning for detection of early onset of Alzheimer's

Latest submissions

5 Puzzles 21 Days. Can you solve it all?

Latest submissions

Sample Efficient Reinforcement Learning in Minecraft

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 150880 |
| failed | 149894 |
| failed | 149002 |

Measure sample efficiency and generalization in reinforcement learning using procedurally generated environments

Latest submissions

5 Puzzles 21 Days. Can you solve it all?

Latest submissions

Self-driving RL on DeepRacer cars - From simulation to real world

Latest submissions

Robustness and teamwork in a massively multiagent environment

Latest submissions

3D Seismic Image Interpretation by Machine Learning

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 82746 |
| graded | 82738 |
| graded | 82737 |

5 Puzzles 21 Days. Can you solve it all?

Latest submissions

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 149881 |
| submitted | 149879 |
| failed | 144887 |

Play in a realistic insurance market, compete for profit!

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 113613 |
| graded | 110174 |
| graded | 110172 |

5 Puzzles 21 Days. Can you solve it all?

Latest submissions

5 Puzzles 21 Days. Can you solve it all?

Latest submissions

Multi-Agent Reinforcement Learning on Trains

Latest submissions

| Status | Submission ID |
|---|---|
| failed | 84106 |
| failed | 84105 |
| failed | 84104 |

A dataset and open-ended challenge for music recommendation research

Latest submissions

A benchmark for image-based food recognition

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 59791 |
| failed | 59371 |
| failed | 31084 |

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 139559 |
| graded | 139558 |
| graded | 139557 |

Sample-efficient reinforcement learning in Minecraft

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 120070 |
| graded | 117123 |
| graded | 113473 |

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 125603 |
| graded | 125602 |
| graded | 125601 |

5 Puzzles, 3 Weeks. Can you solve them all? 😉

Latest submissions

Multi-agent RL in game environment. Train your Derklings, creatures with a neural network brain, to fight for you!

Latest submissions

Predicting smell of molecular compounds

Latest submissions

| Status | Submission ID |
|---|---|
| failed | 114224 |
| failed | 114223 |
| failed | 114222 |

Classify images of snake species from around the world

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 60335 |
| graded | 60334 |

5 Puzzles 21 Days. Can you solve it all?

Latest submissions


Robots that learn to interact with the environment autonomously

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 99115 |
| graded | 99114 |
| failed | 99109 |

5 Puzzles, 3 Weeks | Can you solve them all?

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 115551 |
| graded | 115537 |
| graded | 115536 |

5 PROBLEMS 3 WEEKS. CAN YOU SOLVE THEM ALL?

Latest submissions


Grouping/Sorting players into their respective teams

Latest submissions

5 Problems 15 Days. Can you solve it all?

Latest submissions

Disentanglement: from simulation to real-world

Latest submissions

Sample-efficient reinforcement learning in Minecraft

Latest submissions

Multi Agent Reinforcement Learning on Trains.

Latest submissions

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 191700 |
| graded | 191699 |
| graded | 191698 |


Visual SLAM in challenging environments

Latest submissions


Project 2: Road extraction from satellite images

Latest submissions

Project 2: build our own recommender system, and test its performance

Latest submissions

Project 2: build our own text classifier system, and test its performance.

Latest submissions

Help improve humanitarian crisis response through better NLP modeling

Latest submissions

| Status | Submission ID |
|---|---|
| failed | 32245 |

Reincarnation of personal data entities in unstructured data sets

Latest submissions

Robots that learn to interact with the environment autonomously

Latest submissions

Imitation Learning for Autonomous Driving

Latest submissions

A new benchmark for Artificial Intelligence (AI) research in Reinforcement Learning

Latest submissions


Predict if users will skip or listen to the music they're streamed

Latest submissions

Predict Heart Disease

Latest submissions

PlantVillage is built on the premise that all knowledge that helps people grow food should be openly accessible to anyone on the planet.

Latest submissions


Predict if users will skip or listen to the music they're streamed

Latest submissions

Recognizing bird sounds in monophone soundscapes

Latest submissions


Visual SLAM in challenging environments

Latest submissions


DA Final Project challenges for Monsoon 2020

Latest submissions

ACL-BioNLP Shared Task

Latest submissions


Identify which side has least players

Latest submissions

Predict viewer reactions from a large-scale video dataset!

Latest submissions


Detect Multi-Animal Behaviors from a large, hand-labeled dataset.

Latest submissions

| Status | Submission ID |
|---|---|
| failed | 125036 |

Reinforcement Learning, IIT-M, assignment 1

Latest submissions


Can you dock a spacecraft to the ISS?

Latest submissions


Image-based plant identification at global scale

Latest submissions


Localization, SLAM, Place Recognition, Visual Navigation, Loop Closure Detection

Latest submissions

Localization, SLAM, Place Recognition

Latest submissions

Round 2 - Active | Claim AWS Credits by beating the baseline

Latest submissions

| Status | Submission ID |
|---|---|
| graded | 179081 |

A Challenge on Continual Learning using Real-World Imagery

Latest submissions


Monocular Depth Estimation

Latest submissions

| Participant | Rating |
|---|---|
| thomas_cloarec | 0 |
| anssi | 221 |
| rohitmidha23 | 265 |
| sanjaypokkali | 221 |
| ayush_upadhyay | 0 |
| ashivani | 158 |
| leocd | 0 |
| deepesh_grover | 0 |
| jyotish | |
| vrv | 0 |
| singstad90 | 0 |
| amitabh | 0 |
| jerome_patel | 0 |
| cadabullos | 0 |
| krishna_kaushik | 0 |
| Victim | 0 |
| ReachAMY | 0 |

| Participant | Rating |
|---|---|
| aicrowd-bot | |
| anssi | 221 |
| MasterScrat | 229 |
| mohanty | |
| marcel | 0 |
| user18081971 | 0 |
| nikhil_rayaprolu | 190 |
| sauravkar | 0 |
| pulkit_gera | 0 |
| akshatcx | -39 |
| akhilesh | 0 |
| piotrekpasciak | |
| yoogottamk | 245 |
| hagrid67 | 103 |
| shraddhaa_mohan | 272 |
| aditya_morolia | 0 |
| sanjaypokkali | 221 |
| nickinack | 0 |
| rohitmidha23 | 265 |
| shubham_sharma | 0 |
| kartik.gupta0204 | 0 |
| shubhankar.sb | 0 |
| vrv | 0 |
| ashivani | 158 |
| leocd | 0 |
| michael_bordeleau | 0 |
| dmytro_poplavskiy | 0 |
| michael_schmuker | 0 |
| jyotish | |

- AIcrowd Novartis DSAI Challenge
- AIcrowd AIcrowd Blitz - May 2020
- food-shivam Food Recognition Challenge
- AIcrowd Flatland
- shivam AI for Good - AI Blitz #3
- AIcrowdHQ Insurance pricing game
- shivam Seismic Facies Identification Challenge
- AIcrowd NeurIPS 2021: MineRL Diamond Competition

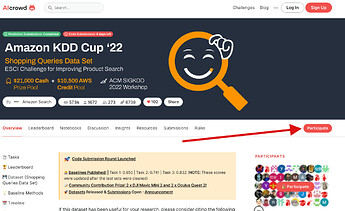

ESCI Challenge for Improving Product Search

No debug logs was given in my fail submission

Over 3 years ago

Hi @dami, I have shared the error and a potential solution on your GitLab issue page, since it includes information about your approach.

Let us know if it helps.

You can reply to the comment here or on the GitLab issue page.

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @wac81, the leaderboard shows only the best submission from each participant, not all submissions. I assume that may have caused the confusion.

You can see all your submissions here (they are merged across all the tasks in this challenge, but you can identify them by the relevant repository name):

https://www.aicrowd.com/challenges/esci-challenge-for-improving-product-search/submissions?my_submissions=true

Best,

Shivam

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @vitor_amancio_jerony, thanks for the logs.

We identified a bug in the evaluation phase that caused the models to load twice; it is now fixed.

We have also restarted your latest submission and are monitoring it in case a similar error occurs.

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi all, if your submission runs noticeably slower online than on your local setup, it might be a good idea to verify that torch or the other relevant packages are installed properly.

Here is an example for torch:

If you are unsure how to verify this for your package, please let us know and we can release relevant FAQs.
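One generic way to check what actually got installed is to log package versions at the start of your run. The snippet below is only a sketch: the helper name `installed_versions` and the package list are illustrative assumptions, and it relies solely on the standard library `importlib.metadata`. For torch, a CUDA build installed from the PyTorch wheel index typically reports a version with a local label such as `+cu111`, but treat that as a hint and not a guarantee.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package name: installed version, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Hypothetical package list; replace it with the packages your submission needs.
print(installed_versions(["torch", "numpy"]))
```

Printing this once in your logs lets you compare the online environment with your local one.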

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @LYZD-fintech,

All successful submissions will be considered for the final ranking in this challenge.

Best,

Shivam

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @LYZD-fintech,

Each submission has dedicated compute resources.

The elapsed time reported on the issue page is currently wrong and will be fixed soon: it shows the total time from submission to completion, instead of the time from the start of your code's execution to its end. The timeout, however, is implemented correctly and only counts the running time.

We provision a new machine dynamically for each submission, so the elapsed time may have been higher when many submissions were in the evaluation queue and multiple machines were being provisioned.

I hope that clears up any confusion.

Best,

Shivam

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @wac81, your submissions have been evaluated.

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @vitor_amancio_jerony @qinpersevere & all,

The image building code has been updated on our side, and the repository size is no longer a restriction.

Please keep in mind that the resources available for inference are the same for everyone, i.e. Tesla V100-SXM2-16GB.

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @zhichao_feng, your submissions failed due to the 90-minute timeout that applies to each of the public and private phase runs (the run reached 92% in 90 minutes).
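To get a rough idea of how far over the limit such a run is, you can project the total runtime from the progress reached. This is a back-of-the-envelope sketch that assumes progress is roughly linear in time, which may not hold for your code; the function name is hypothetical.

```python
def projected_total_minutes(elapsed_minutes: float, fraction_done: float) -> float:
    """Estimate the full runtime, assuming progress grows linearly with time."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in the range (0, 1]")
    return elapsed_minutes / fraction_done


# Figures from the message above: 92% completed when the 90-minute timeout hit.
total = projected_total_minutes(90, 0.92)
print(f"projected total: {total:.1f} min, over the limit by {total - 90:.1f} min")
```

Under that assumption the run would need about 98 minutes, so it has to become roughly 8% faster to fit within the timeout.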

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @wac81,

The issue is resolved, and I noticed you were able to make a submission just now.

Please let us know if you face any other issues.

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @animath3099, the issue has been resolved. You can try to make a submission now.

Note: Please remove debug: true from your aicrowd.json; it is what causes the "no slots remaining" error.
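If you prefer to script that change, the sketch below removes the flag programmatically. It assumes `debug` is a top-level key in `aicrowd.json` (as the note above suggests) and that the file is plain JSON; the helper name `disable_debug` is hypothetical.

```python
import json
from pathlib import Path


def disable_debug(config_path: str = "aicrowd.json") -> bool:
    """Remove a top-level "debug" key from the config; return True if it changed."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    if "debug" not in config:
        return False  # nothing to do
    del config["debug"]
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return True
```

Call `disable_debug()` from the root of your repository, then commit the updated file before creating your next submission tag.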

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @animath3099, can you share the link to the issue page? We are looking into it.

🚀 Datasets Released & Submissions Open 🚀

Over 3 years ago

Hi @olivertautz, that’s not the case: any team member can submit to the challenge, not just one particular member.

Could you please share the relevant URL (issue page, submission ID, etc.) and/or a screenshot?

Fatal: pack exceeds maximum allowed size

Over 3 years ago

Hi @pn1,

Please use git-lfs to upload larger files in your repository.

The relevant commands are available in the starter kit, or you can refer to this for an example.
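Before pushing, it can help to list which files are large enough to be worth tracking with git-lfs. The following is a sketch only: the 50 MB default threshold and the helper name `find_large_files` are assumptions, not limits stated by the platform.

```python
from __future__ import annotations

import os
from pathlib import Path


def find_large_files(repo_root: str, min_bytes: int = 50 * 1024 * 1024) -> list[tuple[str, int]]:
    """List (relative path, size in bytes) of files at or above min_bytes, largest first."""
    root = Path(repo_root)
    large = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Skip git's own object store; it is not part of the working tree.
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_symlink():
                continue
            size = path.stat().st_size
            if size >= min_bytes:
                large.append((path.relative_to(root).as_posix(), size))
    return sorted(large, key=lambda item: item[1], reverse=True)
```

Each path it reports is a candidate for `git lfs track` before you commit.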

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Can you check if weights can be pruned in your approach?

Meanwhile, we are checking internally whether the allowed submission/model size can be increased, and will update you soon.

🚀 Datasets Released & Submissions Open 🚀

Over 3 years ago

Hi @olivertautz,

You can find the participate button on the challenge page.

https://www.aicrowd.com/challenges/esci-challenge-for-improving-product-search

🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @qinpersevere,

We don’t want you to be restricted because of the submission limit.

For that reason, submissions can be up to 10GB in size (the cap exists only to prevent abuse).

In addition, the limit applies only to the files present in your current tag (HEAD, i.e. the part that makes up the submission). You only need to remove unwanted or old models from your HEAD; there is no need to worry about deleting them from the git history.

If your use case requires more than 10GB, please let us know.

Best,

Shivam

cc: @xuange_cui, @TransiEnt, @ystkin, @masatoh
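A quick local check of what would count toward that limit can be sketched as follows. It assumes your working tree matches the tag you are about to push and interprets 10GB as 10 * 1024**3 bytes; both are assumptions, since the evaluator's exact accounting is not described above. The helper names are hypothetical.

```python
import os
from pathlib import Path


def tree_size_bytes(repo_root: str) -> int:
    """Sum the sizes of all regular files under repo_root, ignoring the .git directory."""
    total = 0
    for dirpath, dirnames, filenames in os.walk(repo_root):
        dirnames[:] = [d for d in dirnames if d != ".git"]  # history is not counted
        for name in filenames:
            path = Path(dirpath) / name
            if not path.is_symlink():
                total += path.stat().st_size
    return total


def within_limit(repo_root: str, limit_bytes: int = 10 * 1024**3) -> bool:
    """True if the working tree fits under the (assumed) size limit."""
    return tree_size_bytes(repo_root) <= limit_bytes
```

Because the `.git` directory is skipped, old models that live only in the history do not affect the result, which mirrors the HEAD-only rule described above.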

Frequently Asked Questions

How to install pytorch in your submissions? Can my pytorch use GPU?

Over 3 years ago

Potential Issue:

If the challenge you are participating in provides GPUs during evaluation and your submission runs slower there than it does locally, one potential reason is that the wrong torch build was installed.

Why is this a common occurrence?

PyTorch is undoubtedly one of the most popular frameworks among deep learning users, but it comes with a caveat.

The PyTorch releases on PyPI do not include GPU builds, so it is easy to add torch to your requirements.txt and end up with the CPU version of PyTorch.

How to identify if you are using the correct torch version?

In the build logs provided to you, check which package was downloaded.

For example, the following is a CPU version, as its file name is missing the cuXXX tag:

    Collecting torch
      Downloading torch-1.12.0-cp37-cp37m-manylinux1_x86_64.whl

GPU version examples:

    torch-1.10.2-cu111-cp39-cp39-win_amd64.whl
    torch-1.8.0-cu111-cp36-cp36m-linux_x86_64.whl
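The file-name heuristic above can be automated when scanning build logs. This is a minimal sketch that only looks for a `cuXXX` tag in the name, exactly as the FAQ describes; the helper name `has_cuda_tag` is hypothetical, and the runtime check with `torch.cuda.is_available()` remains the more reliable test.

```python
import re

# A "cu" followed by 2-3 digits, delimited by separators (e.g. "-cu111-" or "+cu111").
_CUDA_TAG = re.compile(r"(?:^|[-+_.])cu\d{2,3}(?=[-_.]|$)")


def has_cuda_tag(wheel_name: str) -> bool:
    """Return True if the wheel file name or version string carries a cuXXX tag."""
    return _CUDA_TAG.search(wheel_name) is not None


for name in (
    "torch-1.12.0-cp37-cp37m-manylinux1_x86_64.whl",
    "torch-1.10.2-cu111-cp39-cp39-win_amd64.whl",
):
    print(name, "->", "cuXXX tag found" if has_cuda_tag(name) else "no cuXXX tag")
```

Note that the `cp37` and `cp39` parts are Python version tags and are deliberately not matched.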

Package: torch

Usage:

    >>> import torch
    >>> # you can add this to your code and print it in your logs to verify that everything works
    >>> torch.cuda.is_available()
    True
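For a slightly more detailed log line, the check can be wrapped as below. This is a sketch, not an official diagnostic: the function name `describe_torch` is hypothetical, while `torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()` and `torch.cuda.get_device_name()` are standard PyTorch attributes and functions. It degrades gracefully if torch is not installed.

```python
def describe_torch() -> dict:
    """Collect basic facts about the installed torch build for logging."""
    try:
        import torch
    except ImportError:
        return {"installed": False}

    info = {
        "installed": True,
        "version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
    return info


print(describe_torch())
```

If `built_with_cuda` is `None`, the installed wheel is a CPU-only build regardless of the hardware available.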

Solution

I am using requirements.txt. What should I do?

You can add --extra-index-url before the torch entry or at the top of your requirements.txt.

If you want to pin a specific CUDA version and PyTorch version, an example follows (you can open the link to check whether your PyTorch version supports your CUDA version):

    --extra-index-url https://download.pytorch.org/whl/cu111
    torch==1.10.1+cu111

I am using environment.yml. What should I do?

The installation is a bit easier with Conda; a sample file looks as follows (note: you can also specify the Python version of your choice, etc.).

    name: myenv
    channels:
      - conda-forge
      - defaults
      - pytorch
    dependencies:
      - python=3.8
      - cudatoolkit=10.2 # cuda version
      - pytorch=1.11 # torch version
      - torchvision
      - torchaudio
      - pip:
        - pandas
        - <....other pip dependencies go here....>

Notebooks

- SequenceTagger Example: It uses SuperPeitho-FLAIR-v2 provided in Ancient Greek BERT (shivam · Over 3 years ago)
- 🍕 Food Recognition Benchmark: Data Exploration & Baseline: Dataset exploration and `detectron2` baseline training code (shivam · About 4 years ago)
- AOT Dataset walkthrough using helper scripts: Demo of dataset helper scripts and walkthrough of the training dataset (shivam · Over 4 years ago)
- Evaluate your predictions locally (validation flights): Demo on using the metrics codebase for generating scores locally (shivam · Over 4 years ago)
- File name to frame metadata exploration: Quick demo on how you can get metadata information for a given frame image path (shivam · Over 4 years ago)
- BaseLine EfficientNet B0 224: Example of training data download via CLI, with official baseline by Snakes Organisers (shivam · Almost 5 years ago)
- Download dataset in Colab/Notebook via CLI: This notebook contains an example of how to download the dataset in a notebook/Colab using aicrowd-cli (shivam · Almost 5 years ago)
- Getting started with TIMSER (baseline notebook): Baseline containing code for making submissions for Time Series Prediction (AI Blitz 5) (shivam · About 5 years ago)

Notebooks

- 🍕 Food Recognition Benchmark: Data Exploration & Baseline: Dataset exploration and `detectron2` baseline training code (shivam · About 4 years ago)
- MMdetection training and submissions (Quick, Active): MMdetection training and evaluation code, getting 0.11 score on the leaderboard (jerome_patel · Almost 4 years ago)
- Detectron2 training and submissions (Quick, Active): v2 of baseline, now supports submissions via Colab for both quick and active participation (jerome_patel · About 4 years ago)
- EDA, FE, HPO - All you need (LB: 0.640): Detailed EDA, FE with Class Balancing, Hyper-Parameter Optimization of XGBoost using Optuna (jyot_makadiya · Almost 5 years ago)
- Evaluate your predictions locally (validation flights): Demo on using metrics codebase for generating scores locally (shivam · Over 4 years ago)
- AOT Dataset walkthrough using helper scripts: Demo of dataset helper scripts and walkthrough to the training dataset (shivam · Over 4 years ago)

Gurgaon, IN


🚀 Code Submission Round Launched 🚀

Over 3 years ago

Hi @dami,

We have re-run these submissions with the fix for your issue.

From now on, they should either pass automatically or show the relevant logs in case of failure.